Because not everyone understands the differences between grid computing and cloud computing, let’s start with a quick overview. While grid computing and cloud computing are not synonymous, there are several synergies between the two, and combining them makes a lot of sense.

Instead of relying on a single computer, grid computing tackles a computing problem with an army of machines working in parallel. This method has several advantages:

Time savings:

With 30 dedicated machines, you could potentially complete a month’s worth of processing work for a single machine in just one day. By leveraging thousands of volunteer computers, the SETI@home project, the world’s largest grid computing initiative, has logged 2 million years of aggregate computing time in only 10 years of elapsed time.
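The arithmetic behind these figures can be checked in a few lines. This is a back-of-the-envelope sketch that assumes the work divides evenly and that coordination overhead is zero, which real grids only approximate; the function names and the 30-day month are illustrative assumptions.

```python
def ideal_elapsed_days(single_machine_days: float, machines: int) -> float:
    """Elapsed time if the work divides evenly with no coordination overhead."""
    return single_machine_days / machines


def average_concurrent_machines(aggregate_years: float, elapsed_years: float) -> float:
    """Average number of busy machines needed to log the aggregate time."""
    return aggregate_years / elapsed_years


# A 30-day job for one machine, spread over 30 dedicated machines.
print(ideal_elapsed_days(30, 30))                   # 1.0
# 2 million machine-years logged in 10 calendar years.
print(average_concurrent_machines(2_000_000, 10))   # 200000.0
```

In other words, the SETI@home figures imply roughly 200,000 machines contributing at any given moment, averaged over the decade.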

Less expensive resources:

You can handle the necessary workload without buying expensive servers with high-end CPUs and memory by using more budget-friendly machines. Granted, you’ll have to purchase more of them, but the smaller, less expensive machines are easier to repurpose.

Reliability:

The grid infrastructure should anticipate individual machine failures or changes in availability and ensure that they do not prevent the task from completing successfully.
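One common way to tolerate individual failures is to put a failed task back in the queue so another worker can pick it up. The sketch below illustrates that idea only; `run_with_retries` and `flaky_square` are hypothetical names, and the failure is simulated rather than caused by a real machine dropping out.

```python
from collections import deque


def run_with_retries(tasks, worker, max_attempts=3):
    """Run every task, re-queuing any task whose worker raises RuntimeError."""
    queue = deque((task, 1) for task in tasks)
    results = {}
    while queue:
        task, attempt = queue.popleft()
        try:
            results[task] = worker(task, attempt)
        except RuntimeError:
            if attempt >= max_attempts:
                raise  # give up once the retry budget is spent
            queue.append((task, attempt + 1))  # retry later, after other tasks
    return results


def flaky_square(task, attempt):
    """Hypothetical worker: even-numbered tasks fail on their first attempt."""
    if task % 2 == 0 and attempt == 1:
        raise RuntimeError(f"worker lost while processing task {task}")
    return task * task


results = run_with_retries(range(5), flaky_square)
print(sorted(results.items()))  # [(0, 0), (1, 1), (2, 4), (3, 9), (4, 16)]
```

The job still completes with every result present, even though three of the five tasks failed once along the way.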

Grid computing is not appropriate for all forms of work. It suits work that can be split into smaller tasks, which are completed in parallel by a loosely linked network of computers. Smart infrastructure is required to distribute the tasks, collect the results, and operate the system. Unsurprisingly, grid computing was first used by people who wanted to solve massive computing problems.
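The split, distribute, and collect cycle described above can be sketched on a single machine. In this minimal example a thread pool stands in for the grid's worker machines, and `process_task` is a hypothetical unit of work; a real grid would ship each task to a separate computer.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, chunk_size):
    """Split the full job into independent tasks of at most chunk_size items."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def process_task(chunk):
    """Hypothetical unit of work: sum of squares for one chunk."""
    return sum(x * x for x in chunk)


def run_grid_job(items, chunk_size=250, workers=4):
    tasks = split(items, chunk_size)
    # The thread pool is a local stand-in for the grid's worker machines.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(process_task, tasks))  # distribute
    return sum(partials)  # collect and combine


total = run_grid_job(list(range(1000)))
print(total == sum(x * x for x in range(1000)))  # True
```

The threads here only illustrate the flow of work. The speedup on a real grid comes from each task running on its own hardware.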

Grid computing is now employed in genomics, actuarial calculations, astronomy analysis, and film animation rendering, among other fields. But that’s changing: grid computing is gaining traction as a solution to broad business problems, and the arrival of cloud computing will hasten this trend. Grid computing does not have to be reserved for massive computing tasks, and compute-intensive projects are not the only type of work that can benefit from it. It is a suitable fit for any activity that is repetitive.

Grid computing may make sense whether you’re a large company with 4 million monthly invoices or a small firm with 1,000 credit card applications to approve. Because grid computing is a few years older than cloud computing, much of today’s grid computing isn’t cloud-based. The following are the most prevalent approaches:

Dedicated machines: buy a lot of computers and set them aside for grid work.

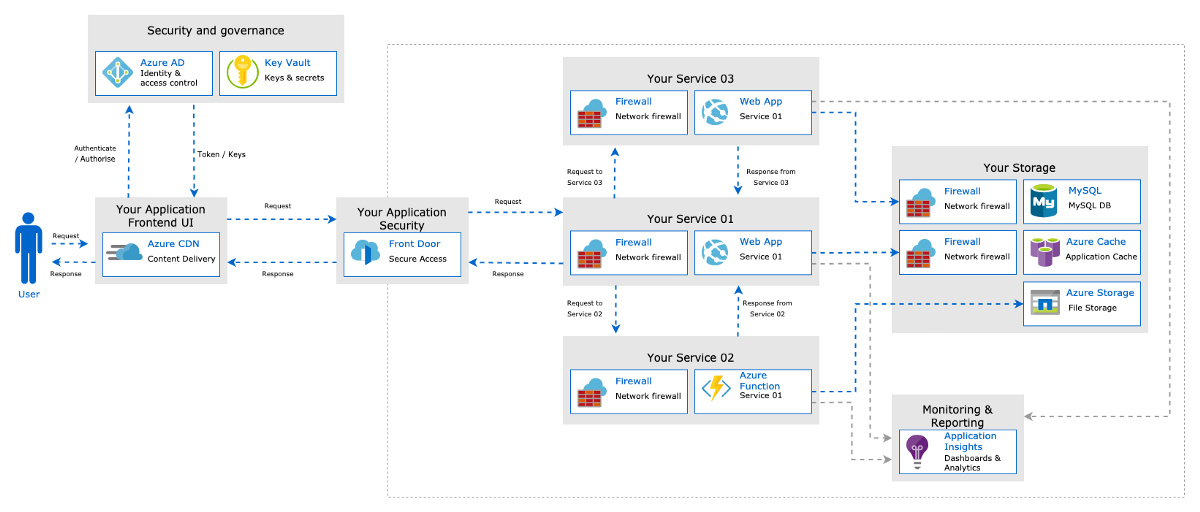

Network cycle stealing: when other machines in your business are inactive, such as overnight, repurpose them for grid work. At night, a business desktop might transform into a grid worker.

Global cycle stealing: apply the cycle stealing principle on a global scale via the Internet. With almost 300,000 active computers, that’s how the SETI@home initiative works.

Cloud computing provides an alternative way to do grid computing that has several appealing features. These include a flexible scale-up/scale-down business model and the availability of much of the infrastructure that previously had to be custom-developed.

Grid Computing Business Applications

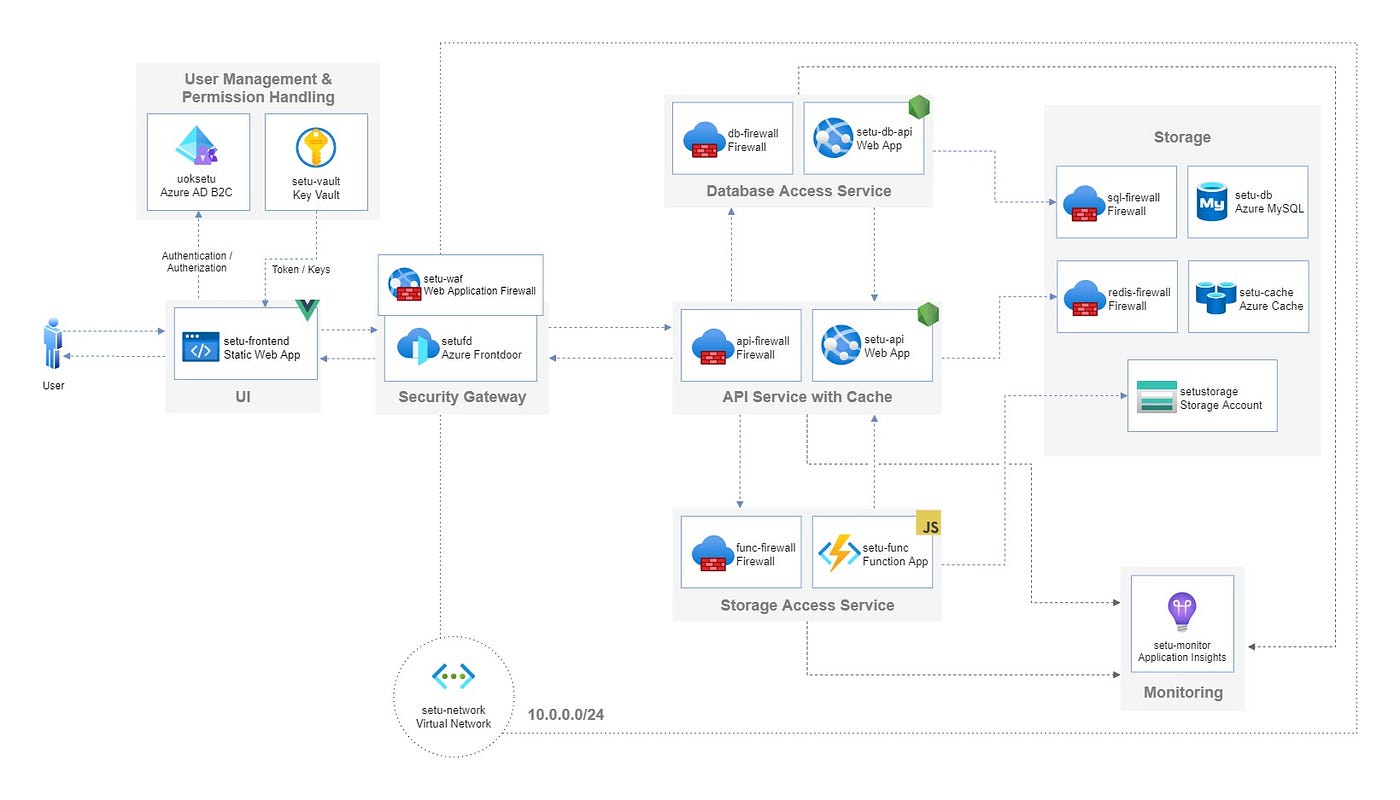

Grid computing must have compelling business applications to gain broad acceptance. Let’s look at three types of commercial applications that benefit from grid computing while also providing significant business value. Data mining is the first example. Big data and other types of data mining can uncover fascinating patterns and relationships in corporate data.

A supermarket could employ data mining to analyze buying habits and make strategic decisions about whether or not to put particular items on sale. A DVD rental chain could use data mining to identify which other rentals to recommend to people, depending on what they’ve just rented. An e-commerce website could use data mining to analyze real-time user navigation patterns and personalize the offers and adverts displayed.
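As a rough illustration of the rental recommendation case, the sketch below counts which titles are rented together and recommends the most frequent companions. The rental histories, function names, and the co-occurrence approach are all assumptions made for illustration; production recommenders are considerably more sophisticated.

```python
from collections import Counter, defaultdict
from itertools import permutations

# Hypothetical rental histories, one set of titles per customer.
histories = [
    {"Alien", "Aliens", "Blade Runner"},
    {"Alien", "Aliens"},
    {"Alien", "Blade Runner"},
    {"Aliens", "Terminator"},
]


def build_cooccurrence(histories):
    """Count how often each pair of titles appears in the same history."""
    co = defaultdict(Counter)
    for titles in histories:
        for a, b in permutations(titles, 2):
            co[a][b] += 1
    return co


def recommend(co, title, k=2):
    """Top-k companion titles; ties are broken alphabetically."""
    ranked = sorted(co[title].items(), key=lambda kv: (-kv[1], kv[0]))
    return [other for other, _ in ranked[:k]]


co = build_cooccurrence(histories)
print(recommend(co, "Aliens"))  # ['Alien', 'Blade Runner']
```

Each customer history is counted independently, so the counting step can be partitioned across grid workers and the partial counters merged afterward.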

How does decisioning play an important role?

The second scenario is decisioning, which involves executing a battery of business rules and then making a determination. In some situations, you must make decisions rapidly, but the computations required are complex. You can use the grid’s parallelism to achieve fast response times that don’t degrade as the workload grows.

- A credit institution could use a decision engine to calculate credit scores.

- A financial institution could use a decision engine to approve loans quickly.

- An insurance firm could use a decision engine to calculate risk and allocate policy rates to applicants.
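A decision engine of this kind can be pictured as a set of rules applied independently to each application, which is what makes the work easy to parallelize. The following is a minimal sketch; the rule names, thresholds, and `Application` fields are hypothetical, and the thread pool stands in for grid workers.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    applicant: str
    credit_score: int
    annual_income: float
    requested_amount: float


# Hypothetical business rules; each returns True when the application passes.
RULES = {
    "minimum_credit_score": lambda a: a.credit_score >= 650,
    "affordable_amount": lambda a: a.requested_amount <= 0.4 * a.annual_income,
}


def decide(application):
    """Run the full battery of rules and return the decision with any failures."""
    failed = [name for name, rule in RULES.items() if not rule(application)]
    return application.applicant, ("approve" if not failed else "decline"), failed


def decide_all(applications, workers=4):
    # Applications are independent, so they can be decided in parallel.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(decide, applications))


applications = [
    Application("A-001", 720, 60_000, 20_000),
    Application("A-002", 610, 85_000, 10_000),
    Application("A-003", 700, 30_000, 15_000),
]
decisions = decide_all(applications)
for decision in decisions:
    print(decision)
```

Because no application depends on another, adding workers raises throughput without changing the decision logic.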

The third scenario is batch processing. It applies when you need to handle bursts of heavy workload but may not have the necessary in-house capacity.

A tax provider must generate and deliver electronic tax documents. The workload is high during tax season and moderates during the rest of the year. Utilizing a grid method for document creation and delivery saves money by preventing the need to invest in extensive in-house capabilities that would often remain unused.

An advertising campaign may require sending massive volumes of emails, faxes, mailings, or voice messages. The grid’s parallelism ensures the campaign is sent quickly and promptly, no matter how large the workload is.

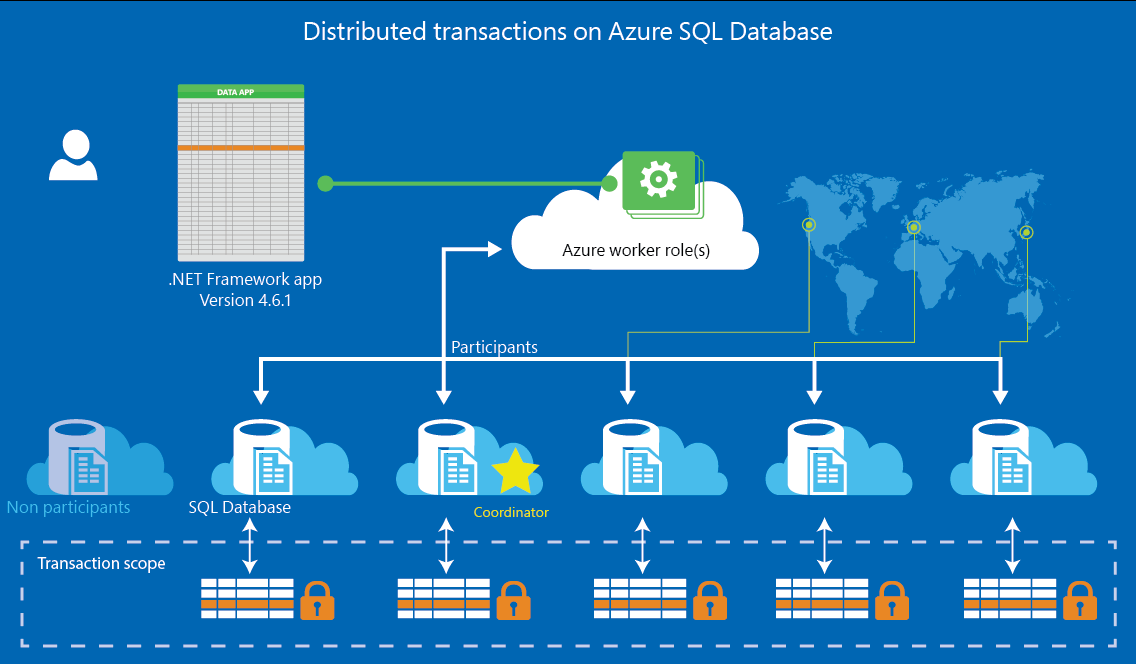

Azure Services for Distributed Computing

Azure offers a suite of services designed to simplify distributed computing. These services are built on a foundation of virtual machines, storage, networking, and data management capabilities. For example, Azure Batch enables the creation and execution of cloud-based applications at scale, with job scheduling and resource management across multiple nodes. Additionally, Azure provides serverless compute capacity through Azure Functions for small tasks such as image processing or web requests.

Users can also provision dedicated clusters for specific workloads through Azure HDInsight without having to manage underlying infrastructure components or worry about software compatibility issues. Furthermore, developers can use analytics tools like Power BI or Azure Stream Analytics, part of Microsoft’s big data offerings, to gain real-time insights into their application data from anywhere in the world. Finally, applying DevOps best practices with tools such as Azure DevOps (formerly Visual Studio Team Services) allows teams to build and quickly deploy highly scalable applications on demand, while Azure Automation accounts help keep them running reliably around the clock, even under peak traffic.

Distributed Data Processing on Azure: Batch and Stream Processing

Distributed data processing on Azure enables you to analyze vast amounts of data in less time. With batch and stream processing on engines such as Apache Spark, you can efficiently process large volumes of structured or unstructured data. From ingestion to transforming your data into actionable insights, Azure’s data processing services help organizations accelerate business decisions through modern analytics frameworks, including machine learning and AI. With features like built-in security policies that protect against cyber threats, data replication for failover scenarios, performance scalability, and cost optimization capabilities, it’s easier than ever to put powerful distributed computing infrastructure into production. Get more out of your big datasets using Microsoft’s distributed data processing services today!
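The difference between batch and stream processing can be shown without any cluster at all. The sketch below is plain Python, not the Spark or Azure API: the batch function sees the whole dataset at once, while the streaming function emits a total per fixed time window as events arrive. The event data and function names are hypothetical, and the stream is assumed to arrive in timestamp order.

```python
# Hypothetical events: (timestamp_in_seconds, value).
events = [(1, 10), (4, 5), (12, 7), (15, 3), (27, 8)]


def batch_total(events):
    """Batch processing: the whole dataset is available before work starts."""
    return sum(value for _, value in events)


def tumbling_window_totals(event_stream, window_seconds=10):
    """Stream processing: yield (window_start, total) as each window closes.

    Assumes events arrive in timestamp order.
    """
    current_window, total = None, 0
    for timestamp, value in event_stream:
        window = timestamp // window_seconds
        if current_window is not None and window != current_window:
            yield current_window * window_seconds, total
            total = 0
        current_window = window
        total += value
    if current_window is not None:
        yield current_window * window_seconds, total  # flush the last window


print(batch_total(events))                          # 33
print(list(tumbling_window_totals(iter(events))))   # [(0, 15), (10, 10), (20, 8)]
```

A distributed engine applies the same two patterns, but partitions the data or the stream across many nodes.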

High-Performance Computing (HPC) on Azure

HPC is designed to help organizations tackle large compute- and data-intensive workloads, including analytics, simulations, and AI. Azure delivers its HPC capabilities through a combination of high-performance infrastructure, a tightly integrated software stack, and advanced storage options that meet the needs of large-scale, compute-intensive workflows. With Azure’s computing resources at their fingertips, developers can increase productivity by running sophisticated applications faster on Azure HPC clusters while still saving costs compared to traditional hardware solutions.

Azure also offers several scalable features that make it easier for customers to choose the right size and power for their particular workloads. These include Azure CycleCloud, which enables users to create an optimized cluster in minutes or even deploy specialized machine learning configurations from custom templates with minimal coding. Furthermore, customers will find that many industry-leading tools, including Microsoft Machine Learning Server, are available on Azure.

Best Practices for Distributed Computing on Azure

The cloud has revolutionized the way businesses operate and provides distributed computing on a large scale. Cloud computing solutions such as Microsoft’s Azure platform allow organizations to take advantage of virtual machines, container technology, automation, and a range of cloud-delivered services. However, with so many options available to business owners, determining which ones best suit their needs can be challenging. Here are some best practices for using Azure’s capabilities in order to maximize performance and lower costs:

- Make use of auto-scaling techniques by setting up clusters that automatically expand or contract based on load levels. This lets you respond quickly when traffic suddenly increases or decreases, without having to adjust your infrastructure manually every time usage patterns change.

- Utilize bin packing algorithms for proper clustering before deploying resources across disparate locations. This helps optimize resource utilization while minimizing overall cost.

- Leverage automated deployment processes and containers as much as possible instead of relying on manual workflows if those options are available. Not only does it reduce complexity, but it also provides more reliable deployments over time since human errors can easily lead to larger issues down the line.
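Two of the practices above, bin packing and auto-scaling, can be sketched in a few lines. The example uses the first-fit decreasing heuristic for packing and a simple backlog-based rule for choosing a node count. The task sizes, node capacity, and scaling limits are hypothetical, and neither function calls an Azure API.

```python
import math


def first_fit_decreasing(task_sizes, node_capacity):
    """Pack tasks onto nodes with first-fit decreasing (a heuristic, not always optimal)."""
    nodes = []  # each node is a list of the task sizes placed on it
    for size in sorted(task_sizes, reverse=True):
        if size > node_capacity:
            raise ValueError(f"task of size {size} exceeds node capacity")
        for node in nodes:
            if sum(node) + size <= node_capacity:
                node.append(size)
                break
        else:
            nodes.append([size])  # no existing node had room
    return nodes


def target_node_count(pending_tasks, tasks_per_node, min_nodes=1, max_nodes=20):
    """Size the pool to the backlog, clamped to configured limits."""
    wanted = math.ceil(pending_tasks / tasks_per_node)
    return max(min_nodes, min(max_nodes, wanted))


# Hypothetical memory needs in GB, packed onto 16 GB nodes.
print(first_fit_decreasing([8, 4, 10, 6, 2, 2], node_capacity=16))
# [[10, 6], [8, 4, 2, 2]]
print(target_node_count(pending_tasks=95, tasks_per_node=10))  # 10
```

Here six tasks fit on two fully used nodes instead of six partially used ones, and the scaling rule keeps the pool within bounds whether the backlog is empty or very large.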

Conclusion

Distributed computing in Microsoft Azure is an effective and efficient approach to cloud computing. By using distributed computing, organizations can reduce their costs by using the available resources more effectively. Distributed computing allows users to access data from multiple locations simultaneously, which supports scalability and productivity. It can also improve resilience by storing data on multiple servers rather than on a single server in a single location, which reduces the risk that a failure or malicious attack at one site leads to data loss. Lastly, distributed computing shortens application development cycles by providing parallel processing capabilities, allowing developers to complete projects quickly while saving time and money compared to traditional methods.

FAQs

1. What is distributed computing in Microsoft Azure?

Distributed computing in Microsoft Azure refers to the process of distributing computational tasks across multiple interconnected resources within the Azure cloud infrastructure to process large datasets or complex workloads efficiently.

2. What are the benefits of distributed computing in Azure?

The benefits of distributed computing in Azure include scalability, high availability, fault tolerance, cost-effectiveness, and the ability to leverage a wide range of cloud services and tools for data processing, analytics, and machine learning.

3. What tools and services does Azure offer for distributed computing?

Azure offers several tools and services for distributed computing, including Azure Virtual Machines for running custom workloads, Azure Kubernetes Service (AKS) for containerized applications, Azure Batch for batch processing, and Azure HDInsight for Apache Hadoop and Apache Spark clusters.

4. How does Azure ensure scalability and performance in distributed computing?

Azure provides a highly scalable and distributed infrastructure, allowing users to dynamically scale computing resources up or down based on demand. Additionally, Azure’s global network infrastructure and high-performance computing capabilities ensure optimal performance for distributed computing workloads.

5. How does fault tolerance work in distributed computing on Azure?

Azure employs built-in fault tolerance mechanisms, such as automatic instance recovery and data replication across multiple datacenters and regions. This ensures that distributed computing workloads remain resilient to hardware failures and other disruptions.

6. How does Azure help manage the cost of distributed computing?

Azure offers flexible pricing options and cost management tools to help users optimize their spending on distributed computing resources. This includes features such as reserved instances, spot instances, and budget alerts.

7. Can I integrate Azure’s distributed computing services with other Azure services?

Yes, Azure’s distributed computing services can be seamlessly integrated with other Azure services, such as Azure Storage for data storage, Azure Event Grid for event-driven computing, and Azure Machine Learning for machine learning workloads.

8. What types of distributed computing workloads are suitable for Azure?

Azure can handle a wide range of distributed computing workloads, including data processing and analysis, machine learning and artificial intelligence, batch processing, real-time streaming, and high-performance computing (HPC).

9. How can I get started with distributed computing on Azure?

To get started with distributed computing on Azure, you can explore Azure’s documentation, tutorials, and sample projects. Additionally, you can leverage Azure’s free trial and credits to experiment with distributed computing workloads at no cost.

10. Are there any best practices for distributed computing on Azure?

Yes, there are several best practices for distributed computing on Azure, including optimizing resource utilization, designing fault-tolerant architectures, leveraging managed services, monitoring performance and costs, and following security and compliance guidelines. Azure’s documentation and support resources provide guidance on implementing these best practices.