This toolkit helps AI developers, data scientists, and researchers accelerate data science and analytics pipelines on Intel architecture. Its components are built using oneAPI libraries, which provide optimized low-level computation. The toolkit maximizes performance across the pipeline, from data preprocessing through machine learning, which makes model development more efficient.

The toolkit is best suited for the following kinds of work:

- High-performance deep learning training runs seamlessly on Intel hardware, and fast inference can be integrated quickly into the deployment workflow. The Intel-optimized deep learning frameworks come with pretrained models and low-precision tools.

- If you are looking for drop-in acceleration of data preprocessing and machine learning workflows, you will find Intel-optimized builds of compute-intensive Python packages, Modin, and XGBoost ready to use.

- Intel provides direct access to its AI and analytics optimizations so that all of the software works together seamlessly.
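Low-precision tools like those mentioned above shrink models and speed up inference by storing values in fewer bits; for example, an INT8 value takes a quarter of the memory of an FP32 value. The sketch below illustrates only the basic idea, symmetric per-tensor INT8 quantization, in plain Python. It is not how Intel's tools implement quantization, and the function names are hypothetical.

```python
"""Illustrative symmetric INT8 quantization (not Intel's implementation)."""
from __future__ import annotations


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes using one symmetric scale for the whole tensor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Choose the scale so the largest magnitude maps to 127; avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() uses round-half-to-even; the clamp keeps codes inside the int8 range.
    quantized = [max(-128, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from int8 codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 0.31]
    codes, scale = quantize_int8(weights)
    restored = dequantize_int8(codes, scale)
    print("codes:", codes, "scale:", scale)
    print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Because each value is rounded to the nearest multiple of the scale, the reconstruction error is at most half the scale. Production tools add calibration and accuracy-driven tuning on top of this basic mapping to keep that error from hurting model accuracy.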

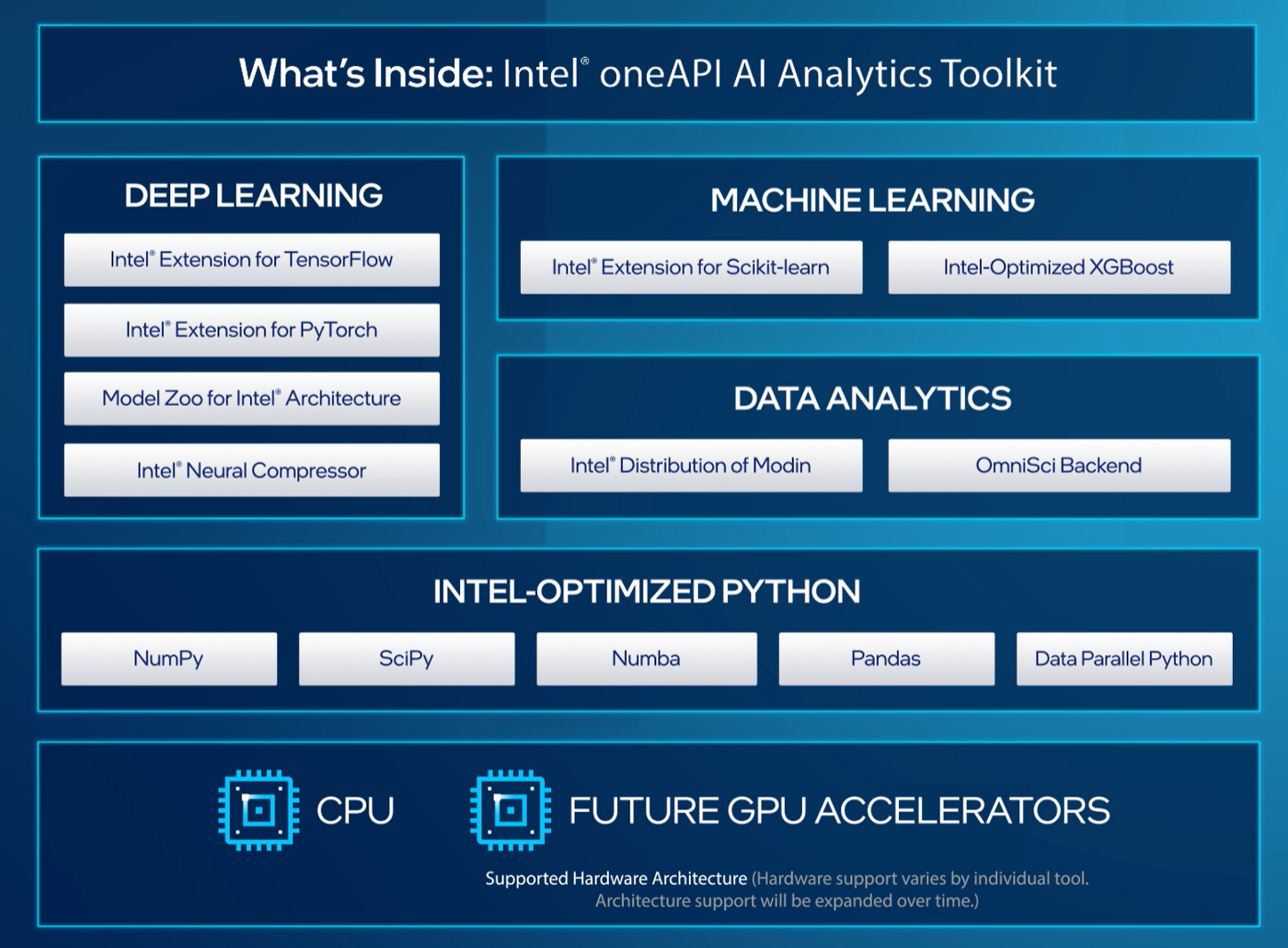

The toolkit can be incorporated into existing projects without special modifications. Specifically, the AI Kit includes the following:

- The Intel® oneAPI Deep Neural Network Library (oneDNN) is included in PyTorch by default and serves as its default math kernel library for deep learning.

- Intel Optimization for TensorFlow integrates oneDNN primitives into the TensorFlow runtime to accelerate performance.

- The Intel Distribution for Python speeds up Python applications with little or no code editing required. It is integrated with Intel® Performance Libraries such as the Intel® oneAPI Math Kernel Library and the Intel® oneAPI Data Analytics Library.
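To check whether the frameworks described above can actually use oneDNN in a given environment, a small script along these lines may help. It is a sketch that relies on PyTorch's `torch.backends.mkldnn.is_available()` and on TensorFlow's `TF_ENABLE_ONEDNN_OPTS` environment variable; the helper name `onednn_status` is hypothetical, and whether TensorFlow enables oneDNN by default depends on its version and platform.

```python
"""Hedged sketch: report whether installed frameworks can use oneDNN."""
import importlib.util
import os


def onednn_status() -> dict:
    """Return a summary of oneDNN availability (hypothetical helper)."""
    status = {}

    # TensorFlow reads TF_ENABLE_ONEDNN_OPTS when it is imported, so the variable
    # must be set beforehand. setdefault keeps any value the user already chose.
    os.environ.setdefault("TF_ENABLE_ONEDNN_OPTS", "1")
    status["tf_enable_onednn_opts"] = os.environ["TF_ENABLE_ONEDNN_OPTS"]
    # find_spec checks that the package is installed without importing it.
    status["tensorflow_installed"] = importlib.util.find_spec("tensorflow") is not None

    if importlib.util.find_spec("torch") is not None:
        import torch.backends.mkldnn

        # PyTorch still names its oneDNN backend "mkldnn" (oneDNN's former name).
        status["torch_onednn"] = torch.backends.mkldnn.is_available()
    else:
        status["torch_onednn"] = None  # PyTorch is not installed.
    return status


if __name__ == "__main__":
    print(onednn_status())
```

Setting the variable in the script, rather than in the shell, only works if the script runs before anything imports TensorFlow.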

These tools help accelerate end-to-end analytics and data science pipelines. Key features include:

- The freedom to use powerful, efficient frameworks optimized by Intel. These make full use of Intel architecture and deliver high performance for both training and inference.

- Pretrained machine learning models, optimized by Intel, help expedite development.

- Accuracy-driven tuning strategies can be applied automatically, using low-precision optimizations to reach a target accuracy while also pursuing objectives such as performance, model size, and memory footprint.

- Scikit-learn and XGBoost algorithms optimized for Intel architecture can improve both the accuracy and the performance of machine learning models.

- Workloads scale efficiently to clusters for distributed machine learning.

- Python is one of the fastest-growing and most popular languages for data analytics and AI, and Intel provides Python builds optimized for Intel architecture.

- Larger data sets can be processed faster through drop-in performance enhancements to existing Python code.

- Vectorization, multithreading, and memory management for scientific-scale computations can all be handled efficiently across a cluster.

- Scaling of multi-node DataFrames is simplified.

- pandas workflows can be scaled to multiple cores and multiple nodes by changing just one line of code. This is achieved through the Intel® Distribution of Modin, a lightweight DataFrame library that runs in parallel.
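The one-line, drop-in pattern described above can be sketched as follows. `import modin.pandas as pd` and `sklearnex.patch_sklearn()` are the documented entry points of Modin and the Intel Extension for Scikit-learn; the two helper functions are hypothetical and simply fall back to the stock packages (or `None`) when the Intel-optimized ones are not installed.

```python
"""Hedged sketch of the drop-in pattern: prefer Intel-optimized packages
when they are installed and fall back to the stock ones otherwise."""
import importlib
from types import ModuleType
from typing import Optional


def load_dataframe_module() -> Optional[ModuleType]:
    """Return modin.pandas if installed, else pandas, else None (hypothetical helper)."""
    for name in ("modin.pandas", "pandas"):
        try:
            # Equivalent to Modin's one-line switch: "import modin.pandas as pd".
            return importlib.import_module(name)
        except ImportError:
            continue
    return None


def enable_sklearn_acceleration() -> bool:
    """Patch scikit-learn via Intel Extension for Scikit-learn, if installed."""
    try:
        from sklearnex import patch_sklearn
    except ImportError:
        return False
    # Call this before importing the scikit-learn estimators to be accelerated.
    patch_sklearn()
    return True


if __name__ == "__main__":
    pd = load_dataframe_module()
    print("DataFrame module:", getattr(pd, "__name__", None))
    print("scikit-learn patched:", enable_sklearn_acceleration())
```

In practice, `pd = load_dataframe_module()` replaces `import pandas as pd` and the rest of the pandas-style code stays unchanged; `enable_sklearn_acceleration()` should run before estimators such as `sklearn.cluster.KMeans` are imported.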

How will oneAPI revolutionize the market?

oneAPI is an ambitious project, and the components that make up the AI Kit could shape the work of many people in this industry. The toolkits are designed to support additional hardware as more acceleration is needed, so oneAPI can be seen as a forward-looking ecosystem built with Intel's range of accelerators in mind. Data scientists and practitioners currently spend many hours on tasks that could be better managed, such as data preparation and memory issues. Unfortunately, the APIs are currently available mostly for CPUs rather than GPUs.

Conda users can install this toolkit through either Miniconda or Anaconda. Miniconda is often regarded as the cleaner workflow because it provides only conda and Python as its base. It is also preferred over a full Anaconda installation because it makes it easier to create dedicated ‘envs’ that contain only the packages and versions a task needs.

PyTorch is widely regarded as an excellent machine learning framework. It is also efficient for general scientific computing, and it performs very well on AI and ML workloads.

FAQs

1. What is the Intel oneAPI AI Analytics toolkit?

The Intel oneAPI AI Analytics toolkit is a comprehensive suite of tools designed to accelerate AI and analytics workflows on Intel hardware. It provides developers with a unified programming model and a set of optimized libraries, frameworks, and tools to build, deploy, and optimize AI, machine learning, and data analytics applications.

2. What are the key components included in the Intel oneAPI AI Analytics toolkit?

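The toolkit bundles Intel-optimized frameworks and packages, including Intel Optimization for TensorFlow, Intel Optimization for PyTorch, Intel Distribution for Python, Intel Distribution of Modin, Intel Extension for Scikit-learn, XGBoost optimized for Intel architecture, Intel Neural Compressor, and the Model Zoo for Intel Architecture. These build on oneAPI libraries such as the Intel oneAPI Deep Neural Network Library (oneDNN), the Intel oneAPI Math Kernel Library (oneMKL, formerly Intel MKL), and the Intel oneAPI Data Analytics Library (oneDAL). Other oneAPI toolkits, such as the Base, HPC, IoT, and Rendering toolkits, are separate products.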

3. What programming languages are supported by the Intel oneAPI AI Analytics toolkit?

The AI Analytics Toolkit is aimed mainly at Python developers, since its frameworks and packages are Python-based. The broader oneAPI ecosystem supports C, C++, Fortran, Python, and Data Parallel C++ (DPC++). DPC++ is an extension of C++ for heterogeneous computing that allows developers to write code that can run on CPUs, GPUs, FPGAs, and other accelerators.

4. How does the Intel oneAPI AI Analytics toolkit help developers accelerate their workflows?

The toolkit provides optimized libraries and frameworks for AI and analytics tasks, enabling developers to leverage the full potential of Intel hardware. By using these libraries and tools, developers can achieve better performance, scalability, and efficiency in their applications.

5. Can the Intel oneAPI AI Analytics toolkit be used for both training and inference in AI models?

Yes, the toolkit supports both training and inference stages of AI model development. Developers can utilize libraries like oneDNN (formerly known as Intel MKL-DNN) for deep learning training and inference acceleration, as well as other tools and frameworks included in the toolkit for various AI tasks.

6. Is the Intel oneAPI AI Analytics toolkit compatible with other popular AI and machine learning frameworks?

Yes, the toolkit is designed to be interoperable with popular AI and machine learning frameworks such as TensorFlow, PyTorch, and scikit-learn. Developers can integrate these frameworks with the toolkit to take advantage of Intel hardware optimizations and performance improvements.

7. What resources are available for developers interested in learning more about the Intel oneAPI AI Analytics toolkit?

Intel provides documentation, tutorials, code samples, and other resources on their official website to help developers get started with the Intel oneAPI AI Analytics toolkit. Additionally, there are forums, community support channels, and training programs available for developers seeking assistance or further guidance in using the toolkit.