This is the release where we begin Deep Learning, a subset of Machine Learning, and introduce the kind of artificial intelligence seen in movies.

How exactly are Artificial Intelligence, Deep Learning and Machine Learning related?

Neural networks come from Deep Learning, which is a subset of machine learning, which is in turn a subset of artificial intelligence. Deep learning gives machines the ability to perform human-like tasks without human involvement.

Neural networks are now a prevalent buzzword, featured in various contexts and even depicted in movies.

In reality, they are algorithms, backed by some maths, that perform specific tasks on given datasets. Neural networks were inspired by the way the human brain works: just as our brain perceives and processes data from all around us, so do neural networks. They take large datasets and find patterns in them to produce the desired output.

Neural networks are also called ANNs (Artificial Neural Networks). They are computational models used in everything from voice recognition and image recognition to robotics.

The term "neural" comes from the neurons of the human brain, and "network" from the way the model connects layers of artificial neurons together into a network to produce an output.

ANNs learn a bit like a child does: by studying the examples they are given. Each ANN is tailored to its particular application, but the basic maths behind them remains the same.

Neural networks are useful because, once trained, a network can become an expert at analysing the type of information it was trained on. During training, it builds its own representation of the information it receives.

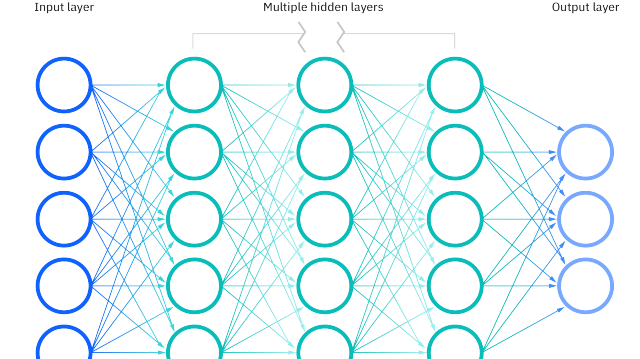

Each neural network is made of layers. The three main types of layers are:

- Input Layer: This is where the network takes in the raw data.

- Hidden Layer: Each hidden layer transforms the values it receives using its own weights, biases and activation function, and passes the result on toward the output layer.

- Output Layer: This layer produces the network's final output.

This is a broad idea of how a neural network looks.
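The layer structure can be made concrete by counting how many weights and biases a network holds. The sketch below assumes every layer is fully connected, meaning each neuron receives every value from the previous layer. The text does not state this. The function name `count_parameters` and the layer sizes are illustrative only.

```python
def count_parameters(layer_sizes):
    """Return (weights, biases) for a fully connected network (an assumption).

    layer_sizes[0] is the input layer; each later entry is a layer of neurons
    (hidden layers first, the output layer last).
    """
    weights = 0
    biases = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights += n_in * n_out  # one weight per connection between the two layers
        biases += n_out          # one bias per neuron; the input layer has none
    return weights, biases

# Hypothetical network: 3 inputs -> two hidden layers of 4 neurons -> 2 outputs
print(count_parameters([3, 4, 4, 2]))  # (36, 10)
```

Here 3×4 + 4×4 + 4×2 = 36 weights and 4 + 4 + 2 = 10 biases. Training has to tune all of these numbers.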

In programming, we represent artificial neural networks (ANNs) as weighted directed graphs: each neuron is a node, and each connection between neurons is an edge with its own weight. Typically, the input takes the form of a vector or matrix denoted x(n), where n is the number of inputs.
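Here is a minimal sketch of the graph view. The node names (`x1`, `h1`, `y`, ...) and the weights are made up for illustration. Edges are stored in a dictionary keyed by `(source, destination)`. Each node's value is the weighted sum over its incoming edges. Bias and activation are deliberately left out so the focus stays on the graph itself.

```python
# Each key is a directed edge (from_node, to_node); each value is that edge's weight.
# Node names and weights are hypothetical.
edges = {
    ("x1", "h1"): 0.4, ("x2", "h1"): -0.6,
    ("x1", "h2"): 0.9, ("x2", "h2"): 0.1,
    ("h1", "y"): 1.5, ("h2", "y"): -0.8,
}

def incoming(node):
    """Return (source, weight) pairs for every edge that points into `node`."""
    return [(src, w) for (src, dst), w in edges.items() if dst == node]

def evaluate(order, inputs):
    """Visit nodes so that each comes after all of its sources and give each one
    the weighted sum of its incoming values (no bias or activation in this sketch)."""
    values = dict(inputs)
    for node in order:
        values[node] = sum(values[src] * w for src, w in incoming(node))
    return values

x = {"x1": 1.0, "x2": 2.0}  # input vector with n = 2
values = evaluate(["h1", "h2", "y"], x)
print(values["y"])  # approximately -2.08
```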

In a neural network, each input is multiplied by a weight, and these weights hold the information the network uses to solve the problem. Inside an artificial neuron, we sum the weighted values and add a bias. The bias shifts the sum so the neuron's output is not forced to zero when all its inputs are zero. Then, you can apply an activation function to this sum. On its own, the sum can take any value, positive or negative, and the activation function brings it into a useful range.
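A single artificial neuron can be sketched in a few lines. It computes the weighted sum of its inputs, adds the bias, and applies an activation. The sigmoid used here is just one common choice of activation, picked for illustration. The input, weight and bias values are arbitrary.

```python
import math

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs, plus bias, through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Worked example with an identity activation: 1.0*0.5 + 2.0*(-0.25) + 0.1 = 0.1
print(neuron([1.0, 2.0], [0.5, -0.25], 0.1, lambda z: z))  # 0.1

# With all-zero inputs the weighted sum is 0, so only the bias can move the output.
print(neuron([0.0, 0.0], [0.7, -0.3], 0.0, sigmoid))  # 0.5
print(neuron([0.0, 0.0], [0.7, -0.3], 2.0, sigmoid))  # larger than 0.5
```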

These activation functions help mold the data into the desired output. There are various types of linear and non-linear activation functions.
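To illustrate the difference between linear and non-linear activations, here are four widely used ones: linear (identity), sigmoid, tanh and ReLU. The text does not name specific functions, so this selection is an assumption. Note that ReLU is one activation whose output ranges from 0 to infinity.

```python
import math

def linear(z):
    return z  # identity: output can be any real number

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))  # squashes any input into (0, 1)

def tanh(z):
    return math.tanh(z)  # squashes any input into (-1, 1)

def relu(z):
    return max(0.0, z)  # 0 for negative inputs, unchanged otherwise: range [0, inf)

for z in (-3.0, 0.0, 3.0):
    print(z, linear(z), round(sigmoid(z), 3), round(tanh(z), 3), relu(z))
```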

Neural networks can be used for different tasks.

- Classification neural networks: These classify patterns or images into predefined classes. For example, a network can look at an image of a handwritten digit and decide which digit it shows.

- Prediction neural networks: These are trained to make forecasts and predictions from the data they have seen. Stock market predictors are one example.

- Clustering neural networks: These find groups of similar items in a dataset and cluster them into classes without needing predefined labels.
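For a classification network like the handwritten-digit example, one common approach turns the output layer's raw scores into a class decision. The text does not specify this step. Softmax converts the scores into probabilities, and the predicted class is the one with the highest score. The scores below are hypothetical, as if a trained network had produced one score per digit 0 to 9.

```python
import math

def softmax(scores):
    """Turn raw output-layer scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp without changing the result
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_class(scores):
    """The predicted class is the index of the largest score."""
    return max(range(len(scores)), key=lambda i: scores[i])

# Hypothetical output scores for one digit image, one per class 0-9.
scores = [0.1, 0.3, 2.5, 0.0, -1.0, 0.2, 0.4, 0.1, 0.9, -0.5]
probs = softmax(scores)
print(predict_class(scores))  # 2
```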

There are more types, such as GANs (Generative Adversarial Networks), which are very powerful neural networks capable of synthesising new data of their own.

Training and running large neural networks requires substantial computational power, so they often run on GPUs and TPUs. GPUs are commonly used for tasks such as image classification and object detection.

Training the Algorithms:

Data typically flows forward through a neural network, from the input layer to the output layer, with each layer applying its weights and biases along the way. To learn, the network also needs a training algorithm: a rule for adjusting those weights and biases based on the examples it sees.

There are 2 major algorithms involved:

- Gradient Descent Algorithm: This is the most basic training algorithm for supervised learning. It measures the difference (the error) between the actual output and the output given by the neural network. It then repeatedly adjusts the weights and biases in the direction that reduces that error.

- Back Propagation Algorithm: This extends gradient descent to multilayered networks. It sends the error backward from the output layer through the hidden layers, which tells gradient descent how much each weight and bias contributed to the error.
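The two algorithms work together. Back propagation computes how much each weight and bias contributed to the error, and gradient descent uses those numbers to update them. The sketch below shows this on a tiny network and is illustrative only, not a production training loop. Several choices in it are assumptions not taken from the text:

- one input
- a hidden layer of 3 tanh neurons
- one linear output
- mean squared error as the error measure
- a learning rate of 0.1
- made-up training data following y = 0.5x

```python
import math
import random

def train(data, hidden=3, lr=0.1, epochs=500, seed=0):
    """Full-batch gradient descent with hand-written back propagation (a sketch)."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                               # hidden biases
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                          # output bias
    n = len(data)
    losses = []
    for _ in range(epochs):
        gw1, gb1, gw2, gb2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
        loss = 0.0
        for x, y in data:
            # Forward pass: input -> hidden (tanh) -> linear output.
            h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
            y_hat = sum(w2[j] * h[j] for j in range(hidden)) + b2
            err = y_hat - y                # network output minus target
            loss += err * err / n          # mean squared error
            # Backward pass: derivative of the loss with respect to y_hat.
            d_out = 2.0 * err / n
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                # Send the error back through hidden neuron j (tanh' = 1 - tanh^2).
                d_hidden = d_out * w2[j] * (1.0 - h[j] ** 2)
                gw1[j] += d_hidden * x
                gb1[j] += d_hidden
        losses.append(loss)
        # Gradient descent step: move every parameter against its gradient.
        for j in range(hidden):
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2
    return losses

# Hypothetical training data: 9 points on the line y = 0.5x for x in [-1, 1].
data = [(x, 0.5 * x) for x in (i / 4 for i in range(-4, 5))]
losses = train(data)
print(losses[0], losses[-1])  # the error should shrink as training proceeds
```

Real libraries compute these gradients automatically, but writing them out shows that back propagation is just the chain rule applied layer by layer, from the output back toward the input.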

After training, the neural network performs well at the specific task it was trained for and can produce outputs for new data, such as a test dataset.