What is a data fabric?


In the past, organizations have also tried to solve data access problems through either point-to-point integration or data hubs. When data is highly dispersed and siloed, neither option works well. Point-to-point integration becomes more expensive with every endpoint added, because each new endpoint may need its own connection to every other endpoint, so the approach does not scale. Data hubs make it easier to onboard new applications and data sources, but they increase the cost and complexity of maintaining data quality and integrity within the hub.
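
A quick count illustrates the scaling problem. The sketch below assumes the worst case, in which every endpoint must exchange data with every other endpoint and each pair needs its own integration. Real environments are rarely a full mesh, so treat the numbers as an upper bound. The function names are illustrative only.

```python
def point_to_point_links(endpoints: int) -> int:
    """Integrations needed when every endpoint connects directly to every other one."""
    return endpoints * (endpoints - 1) // 2


def hub_links(endpoints: int) -> int:
    """Integrations needed when every endpoint connects only to one central hub."""
    return endpoints


for n in (5, 10, 50):
    print(f"{n:>3} endpoints: {point_to_point_links(n):>5} point-to-point links, "
          f"{hub_links(n):>3} hub links")
```

Direct links grow roughly with the square of the number of endpoints (1,225 for 50 endpoints), while hub links grow linearly. The hub avoids that growth but concentrates the data quality burden in one place.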

The data fabric is an emerging architecture designed to address the data challenges that hybrid data environments present. Its core idea is to strike a balance between decentralization and globalization by acting as the virtual connective tissue between data endpoints.

Through the automation and augmentation of data integration, federated governance, and data activation, a data fabric architecture enables dynamic, intelligent data orchestration across a distributed landscape, creating a network of instantly available data to power the enterprise.

A data fabric is independent of deployment platforms, data processes, geographic locations, and architectural approach. It enables the organization to use data as an asset. A data fabric ensures that your diverse types of data can be successfully combined, accessed, and governed efficiently and effectively.

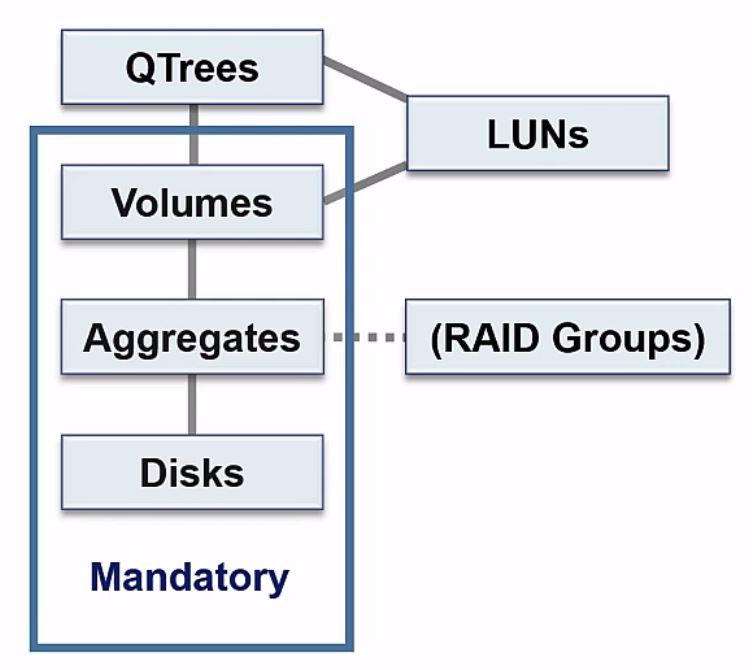

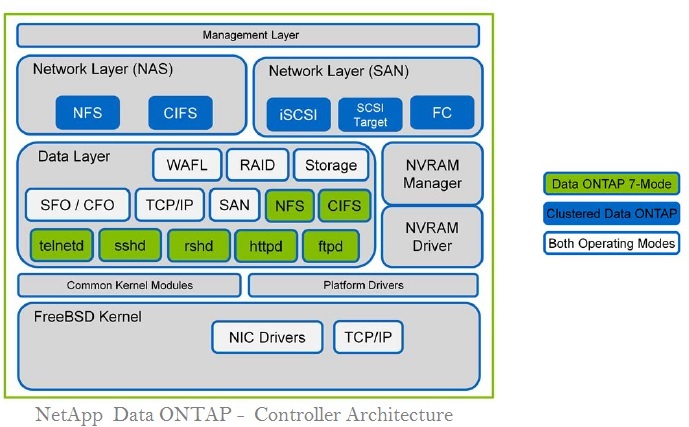

Core Components of NetApp Storage Architecture

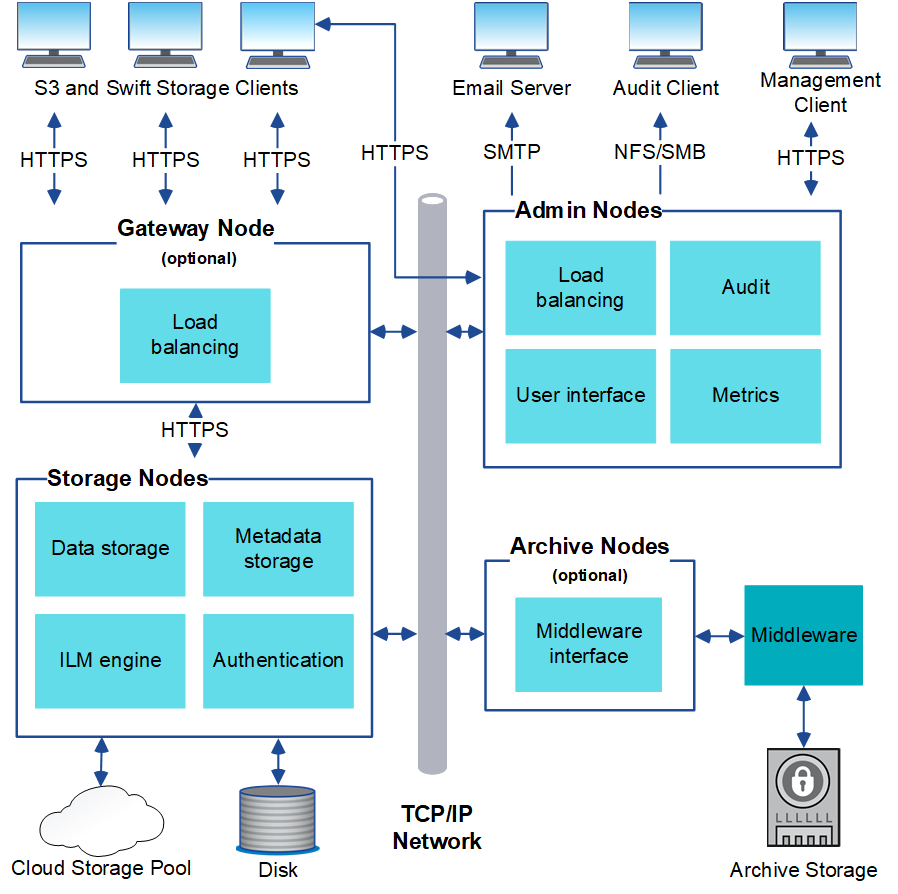

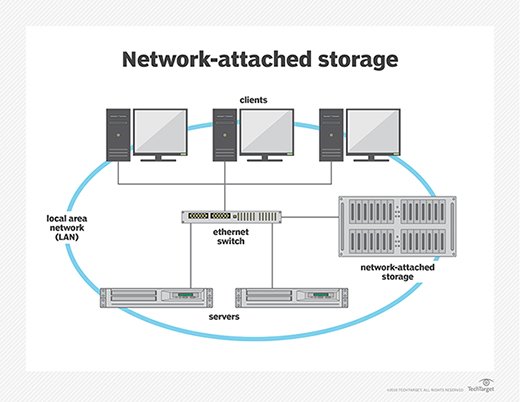

NetApp’s storage architecture is built around several core components: the hardware, the Data ONTAP operating system, and SnapVault. These components provide a comprehensive suite of capabilities designed to optimize enterprise data management across physical, virtual and cloud environments.

The NetApp hardware platform has been designed to be versatile and highly reliable. It provides raw capacity for all types of applications and workloads. The Data ONTAP software provides automated management of both SAN and NAS configurations as well as advanced features such as thin provisioning, deduplication, snapshotting, replication, and more.
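
To make deduplication concrete, here is a minimal sketch of generic block-level deduplication: data is split into fixed-size blocks, each block is fingerprinted, and identical blocks are stored only once. This is not ONTAP's implementation. The 4096-byte block size, the SHA-256 fingerprints, and the function names are assumptions chosen for illustration.

```python
import hashlib


def dedupe_blocks(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and keep one copy of each unique block."""
    store = {}   # fingerprint -> block contents (unique blocks only)
    layout = []  # ordered fingerprints needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)  # store the block only the first time
        layout.append(fingerprint)
    return store, layout


def rebuild(store, layout) -> bytes:
    """Reassemble the logical data from the fingerprint layout."""
    return b"".join(store[fp] for fp in layout)


data = b"A" * 4096 * 3 + b"B" * 4096  # four logical blocks, three of them identical
store, layout = dedupe_blocks(data)
print(len(layout), len(store))  # 4 2
```

A more careful design might also compare blocks byte for byte before sharing them, rather than relying on the fingerprint alone.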

Finally, SnapVault provides secure remote backup that can greatly reduce recovery time objectives (RTOs). Together, these components give IT professionals a simple, easy-to-deploy, complete storage solution for their organization's needs.

Functionality and fundamentals of a data fabric


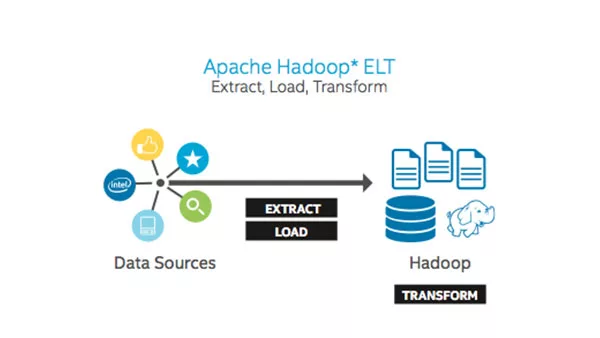

A data management framework that supports the full scope of unified data management capabilities, including discovery, governance, curation, and orchestration, forms the basis of the data fabric architecture.

A data fabric evolves from data management ideas such as DataOps, which focuses on established practices for operationalizing data. It is built on a shared architecture and modern technology capable of meeting the demands posed by the extreme diversity and dispersion of data assets. A data fabric can be divided logically into four functionalities (or components):

Knowledge, understanding, and semantics


Offers a marketplace and shopping experience for data.

Enriches automatically discovered data assets with knowledge and semantics, enabling consumers to find and understand the data.
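
As an illustration of enriching discovered assets with semantics, the sketch below tags each asset with business terms from a glossary so that consumers can search by meaning rather than by table name. The glossary, asset names, and substring matching rule are all hypothetical. A real catalog would need a more careful matching rule; the one here would, for example, also tag a column named `review` as Revenue.

```python
from dataclasses import dataclass, field

# Hypothetical business glossary: column-name fragments mapped to business terms
GLOSSARY = {"cust": "Customer", "email": "Contact information", "rev": "Revenue"}


@dataclass
class Asset:
    name: str
    columns: list
    terms: set = field(default_factory=set)


def enrich(asset: Asset) -> Asset:
    """Tag an asset with every glossary term whose fragment appears in a column name."""
    for column in asset.columns:
        for fragment, term in GLOSSARY.items():
            if fragment in column.lower():
                asset.terms.add(term)
    return asset


def search(catalog, term):
    """Return the names of all assets tagged with the given business term."""
    return sorted(a.name for a in catalog if term in a.terms)


catalog = [
    enrich(Asset("crm.accounts", ["cust_id", "email_addr"])),
    enrich(Asset("erp.sales", ["cust_id", "rev_usd"])),
    enrich(Asset("hr.staff", ["emp_id", "email"])),
]
print(search(catalog, "Customer"))             # ['crm.accounts', 'erp.sales']
print(search(catalog, "Contact information"))  # ['crm.accounts', 'hr.staff']
```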

Unified compliance and governance


Supports a global, unified view and policy enforcement while allowing metadata to be managed locally.

Automatically applies policies to data to comply with local and global regulations.

Uses advanced capabilities to automate the classification and curation of data assets.

Automatically establishes queryable access paths to all cataloged assets for better data activation.

Intelligent integration

Streamlines the tasks of a data engineer by automating the creation of data flows and pipelines across distributed data sources.

Enables self-service ingestion of and access to any data, with strict enforcement of local and global data protection policies.

Automatically determines the optimal execution strategy through streamlined workload distribution, self-tuning, and schema drift correction.
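
Schema drift correction starts with noticing that a source no longer matches what a pipeline expects. The minimal sketch below compares an expected schema with the one a source now reports and then applies one simple, assumed correction policy (keep the expected columns, fill missing ones with `None`). The column names, type labels, and policy are hypothetical; an actual data fabric may choose to evolve the target schema instead.

```python
def detect_schema_drift(expected: dict, observed: dict) -> dict:
    """Compare an expected schema (column -> type) with the schema a source now reports."""
    added = sorted(set(observed) - set(expected))
    removed = sorted(set(expected) - set(observed))
    changed = sorted(c for c in set(expected) & set(observed) if expected[c] != observed[c])
    return {"added": added, "removed": removed, "type_changed": changed}


def align_record(record: dict, expected: dict) -> dict:
    """One simple correction policy: keep expected columns, fill missing ones with None."""
    return {col: record.get(col) for col in expected}


expected = {"id": "int", "name": "str", "country": "str"}
observed = {"id": "int", "name": "str", "region": "str", "country": "int"}
print(detect_schema_drift(expected, observed))
# {'added': ['region'], 'removed': [], 'type_changed': ['country']}
print(align_record({"id": 7, "name": "Ada", "region": "EMEA"}, expected))
# {'id': 7, 'name': 'Ada', 'country': None}
```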

Orchestration and lifecycle


Enables data pipeline construction, testing, operation, and monitoring.

Incorporates AI capabilities into the data lifecycle to automate tasks, self-tune, self-heal, and detect changes in data sources, enabling automatic updates.
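
A very small version of pipeline orchestration with a crude form of self-healing is sketched below. Steps run in dependency order using the standard-library `graphlib.TopologicalSorter` (Python 3.9+), and a failed step is retried a fixed number of times. The step names, the retry count, and the simulated failure are hypothetical. Real orchestration layers add scheduling, testing, and monitoring on top of this.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, deps, max_retries=2):
    """Run steps in dependency order, retrying each failed step up to max_retries times."""
    log = []
    for name in TopologicalSorter(deps).static_order():
        step = steps[name]
        for attempt in range(1, max_retries + 2):
            try:
                step()
                log.append((name, attempt, "ok"))
                break
            except Exception as exc:  # record the failure and try again
                log.append((name, attempt, f"failed: {exc}"))
        else:
            raise RuntimeError(f"step {name!r} failed after {max_retries + 1} attempts")
    return log


calls = {"extract": 0}


def flaky_extract():
    # Simulates a source that is unavailable on the first attempt only
    calls["extract"] += 1
    if calls["extract"] == 1:
        raise ConnectionError("source unavailable")


steps = {"extract": flaky_extract, "transform": lambda: None, "load": lambda: None}
deps = {"transform": {"extract"}, "load": {"transform"}}
for entry in run_pipeline(steps, deps):
    print(entry)
```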

The business advantages of a data fabric

Data provides business value only once it is understood and made available to all users and applications within an organization. A data fabric, implemented correctly, makes data accessible throughout the organization as efficiently as possible. It offers three key advantages:

- Enable collaboration and self-service data consumption.

- Automate governance, data protection, and security, enabled by active metadata.

- Automate data engineering and enhance data integration across hybrid cloud resources.

Enable self-service data consumption and collaboration

Businesses gain greater insight by integrating information from diverse sources and analyzing a larger portion of the data they produce every day, and they can respond faster to shifting business demands. A data fabric delivers data quickly to the people who need it. Self-service also helps the company find relevant data faster and spend more time turning that data into actionable insights.

Storage Controller: The Brain Behind NetApp Storage

The storage controller is the heart of any NetApp system and plays a very important role in delivering high-performance, highly available data storage. It provides intelligence to manage both physical and virtual components that help ensure optimal performance for various applications. The controller helps aggregate, classify, prioritize, and balance data traffic across different nodes on the network while maintaining security standards. It also ensures reliable data access by using redundant networks and integrated failover services that support fault tolerance.
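
The failover behavior can be pictured with a toy model of an HA pair: each controller sends heartbeats, and if one goes silent for longer than a timeout while its partner is still alive, the partner takes over that controller's storage. This is a simplified sketch under assumed names (`node-a`, `aggr-a`) and an assumed 3-second timeout. It is not NetApp's takeover logic and omits giveback, split-brain protection, and cluster quorum.

```python
class HAPair:
    """Toy HA pair: each node serves its own storage; a surviving partner takes over."""

    def __init__(self, timeout=3.0):
        self.timeout = timeout
        self.partner = {"node-a": "node-b", "node-b": "node-a"}
        self.last_seen = {"node-a": 0.0, "node-b": 0.0}
        # Hypothetical storage pools and the node currently serving each one
        self.owner = {"aggr-a": "node-a", "aggr-b": "node-b"}

    def heartbeat(self, node, now):
        self.last_seen[node] = now

    def check(self, now):
        """Move storage from any silent node to its partner, if the partner is alive."""
        alive = {n for n, seen in self.last_seen.items() if now - seen <= self.timeout}
        for aggr, node in self.owner.items():
            if node not in alive and self.partner[node] in alive:
                self.owner[aggr] = self.partner[node]
        return dict(self.owner)


pair = HAPair()
pair.heartbeat("node-a", 10.0)
pair.heartbeat("node-b", 10.0)
print(pair.check(11.0))  # {'aggr-a': 'node-a', 'aggr-b': 'node-b'}
pair.heartbeat("node-b", 14.0)  # node-a has stopped sending heartbeats
print(pair.check(14.5))  # {'aggr-a': 'node-b', 'aggr-b': 'node-b'}
```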

The controller also enables advanced enterprise features such as Snapshot copies for rollback, cloning to provision new servers and services quickly from existing templates, deduplication to save disk space, and thin provisioning to improve resource utilization. Because the architecture scales from single-node deployments up to multi-node clusters in which controllers are paired in high-availability (HA) configurations, businesses can rely on uninterrupted operations.
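
One common way to make snapshot rollback cheap is pointer-based (redirect-on-write) snapshots: taking a snapshot copies the map of which blocks make up the volume, not the data itself, and new writes go to fresh blocks so older versions stay intact. The toy class below sketches that idea in a simplified, assumed form (no space reclamation, no on-disk layout). The class and method names are hypothetical, and this is not how ONTAP implements Snapshot copies.

```python
class Volume:
    """Toy volume with pointer-based snapshots (redirect-on-write)."""

    def __init__(self):
        self.blocks = {}     # physical block id -> data; blocks are never overwritten
        self.active = {}     # logical block number -> physical block id
        self.snapshots = {}  # snapshot name -> frozen copy of the block map
        self._next_id = 0

    def write(self, lbn, data):
        # New data always lands in a fresh physical block, so snapshots keep old versions
        self.blocks[self._next_id] = data
        self.active[lbn] = self._next_id
        self._next_id += 1

    def read(self, lbn):
        return self.blocks[self.active[lbn]]

    def snapshot(self, name):
        # Only the pointer map is copied, not the data blocks themselves
        self.snapshots[name] = dict(self.active)

    def rollback(self, name):
        self.active = dict(self.snapshots[name])


vol = Volume()
vol.write(0, b"config v1")
vol.snapshot("before-upgrade")
vol.write(0, b"config v2")
print(vol.read(0))  # b'config v2'
vol.rollback("before-upgrade")
print(vol.read(0))  # b'config v1'
```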

Data fabric advantages for self-service data consumption:


Enterprise users have a central place to discover, understand, shape, and consume enterprise-wide data.

Centralized data governance and lineage let users understand what the data means, where it came from, and how it relates to other assets.

Extensive, customizable metadata management scales well and is accessible through APIs.

Self-service connectivity to trusted and governed data enables collaboration between line-of-business users.
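
Lineage answers the question "where did this data come from?". The minimal sketch below stores lineage as a mapping from each asset to the assets it was derived from and walks it breadth-first to list everything upstream of a given asset. The asset names and edges are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets it was derived from
LINEAGE = {
    "dashboard.revenue": ["mart.sales_daily"],
    "mart.sales_daily": ["staging.orders", "staging.fx_rates"],
    "staging.orders": ["erp.orders"],
    "staging.fx_rates": ["ext.fx_feed"],
}


def upstream(asset):
    """Return every asset the given asset depends on, nearest first (breadth-first)."""
    seen, order, queue = set(), [], deque(LINEAGE.get(asset, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # shared ancestors are listed only once
        seen.add(parent)
        order.append(parent)
        queue.extend(LINEAGE.get(parent, []))
    return order


print(upstream("dashboard.revenue"))
# ['mart.sales_daily', 'staging.orders', 'staging.fx_rates', 'erp.orders', 'ext.fx_feed']
```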

Active metadata automates governance, data protection, and security:


A distributed, active governance layer spanning all initiatives reduces compliance risk by providing transparency and trust. It supports privacy and regulatory compliance by enforcing policies in real time on every data access.

Machine learning and artificial intelligence let data fabric users raise their level of automation, for instance by extracting the relevant data governance rules from the language and definitions in regulatory documents. This enables organizations to apply industry-specific governance in a few minutes, avoiding expensive fines and ensuring the ethical use of data wherever it resides.
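
Real-time policy enforcement on every data access can be sketched as a function that sits between the consumer and the data and masks any value the caller is not cleared to see. In this minimal sketch the column classifications, roles, and mask string are hypothetical. Unclassified columns are treated as sensitive, a deny-by-default choice.

```python
# Hypothetical column classifications, as a catalog might record them
CLASSIFICATION = {"email": "PII", "ssn": "PII", "country": "public", "spend": "internal"}

# Hypothetical policy: which classifications each role may see unmasked
POLICY = {
    "analyst": {"public", "internal"},
    "privacy_officer": {"public", "internal", "PII"},
}


def enforce(row: dict, role: str) -> dict:
    """Mask every value whose classification the role is not cleared for."""
    allowed = POLICY.get(role, {"public"})  # unknown roles see public data only
    # Unclassified columns default to "PII" so unknown data is masked (deny by default)
    return {
        col: (val if CLASSIFICATION.get(col, "PII") in allowed else "****")
        for col, val in row.items()
    }


row = {"email": "ana@example.com", "country": "PT", "spend": 120}
print(enforce(row, "analyst"))          # {'email': '****', 'country': 'PT', 'spend': 120}
print(enforce(row, "privacy_officer"))  # {'email': 'ana@example.com', 'country': 'PT', 'spend': 120}
```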

Data fabric advantages for governed virtualization:


- The productivity, security, and agility of data engineers, scientists, and business analysts are enhanced.

- Multiple sources of global data appear as a single database.

- Discovery of personally identifiable information (PII) and critical data elements is possible at massive scale.
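
The idea of several sources appearing as a single database can be sketched with Python's built-in `sqlite3` module: two attached in-memory databases stand in for regional sources, and a view unions them so a consumer queries one name. This is only an analogy under stated assumptions (the `eu`/`us` schemas and the `all_customers` view are hypothetical). A real virtualization layer would push queries down to remote systems rather than hold all data in one process.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Two separate in-memory databases stand in for two regional data sources
conn.execute("ATTACH DATABASE ':memory:' AS eu")
conn.execute("ATTACH DATABASE ':memory:' AS us")
for schema, customers in (("eu", [(1, "Alice"), (2, "Bruno")]), ("us", [(3, "Carla")])):
    conn.execute(f"CREATE TABLE {schema}.customers (id INTEGER, name TEXT)")
    conn.executemany(f"INSERT INTO {schema}.customers VALUES (?, ?)", customers)

# A TEMP view is used because SQLite only lets temp views reference attached databases
conn.execute("""
    CREATE TEMP VIEW all_customers AS
    SELECT id, name, 'eu' AS region FROM eu.customers
    UNION ALL
    SELECT id, name, 'us' AS region FROM us.customers
""")

rows = conn.execute("SELECT id, name, region FROM all_customers ORDER BY id").fetchall()
print(rows)  # [(1, 'Alice', 'eu'), (2, 'Bruno', 'eu'), (3, 'Carla', 'us')]
```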

Data ONTAP: The Operating System for NetApp Storage Systems

Data ONTAP is the operating system (OS) that NetApp developed for its storage systems. It is designed to give organizations of all sizes the agility, scalability, and flexibility they need to achieve their business goals. Data ONTAP provides data management services such as automated tiering, unified storage (NAS and SAN), thin provisioning, deduplication, and cloud-based backup in a single package, helping businesses manage growing volumes of data. It also includes a suite of virtualization features and security controls for stored data. The platform can be managed remotely through a web-based console or a command-line interface, which reduces the workload on IT staff.
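
Thin provisioning means a volume advertises more capacity than it has physically reserved and draws blocks from a shared pool only when data is first written. The toy class below sketches that behavior. The pool dictionary, block counts, and class name are hypothetical, and the sketch ignores real-world concerns such as space reclamation and capacity alerts.

```python
class ThinVolume:
    """Toy thin-provisioned volume: advertises a large size, allocates on first write."""

    def __init__(self, logical_blocks, pool):
        self.logical_blocks = logical_blocks  # size presented to the host
        self.pool = pool                      # shared physical pool, e.g. {"free": 100}
        self.mapped = {}                      # logical block -> data, only once written

    def write(self, lbn, data):
        if not 0 <= lbn < self.logical_blocks:
            raise IndexError("write beyond advertised size")
        if lbn not in self.mapped:
            # First write to this block: take one physical block from the shared pool
            if self.pool["free"] == 0:
                raise RuntimeError("physical pool exhausted")  # the risk of overcommitting
            self.pool["free"] -= 1
        self.mapped[lbn] = data

    def used_blocks(self):
        return len(self.mapped)


pool = {"free": 100}
vols = [ThinVolume(80, pool), ThinVolume(80, pool)]  # 160 blocks promised, 100 physical
for lbn in range(30):
    vols[0].write(lbn, b"x")
vols[1].write(5, b"y")
vols[1].write(5, b"z")  # rewriting a block does not allocate again
print(pool["free"], vols[0].used_blocks(), vols[1].used_blocks())  # 69 30 1
```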

Conclusion

Overall, NetApp storage architecture provides an advanced solution for managing and moving large amounts of data. Its comprehensive feature set can be deployed at whatever scale customers need, and users can configure the system to meet their specific requirements. With these features, businesses can improve the performance, scalability, and reliability of their storage while reducing total cost of ownership. Because NetApp components are designed for easy deployment, maintenance, and support, the technology suits both small and mid-sized businesses and larger organizations with complex workloads.
