Through years of research, NVIDIA has helped IT leaders manage and scale their data centers with GPU-based NVIDIA data center solutions.

Data centers tackle complex challenges, from data analytics and high-performance computing (HPC) to artificial intelligence and rendering services. The NVIDIA computing platform accelerates these workloads end to end, across both software and hardware. It gives enterprises a blueprint for a secure infrastructure that supports both the development and the deployment of applications, and it does so across all of these workloads.

NVIDIA has developed the following solution to meet these demanding requirements.

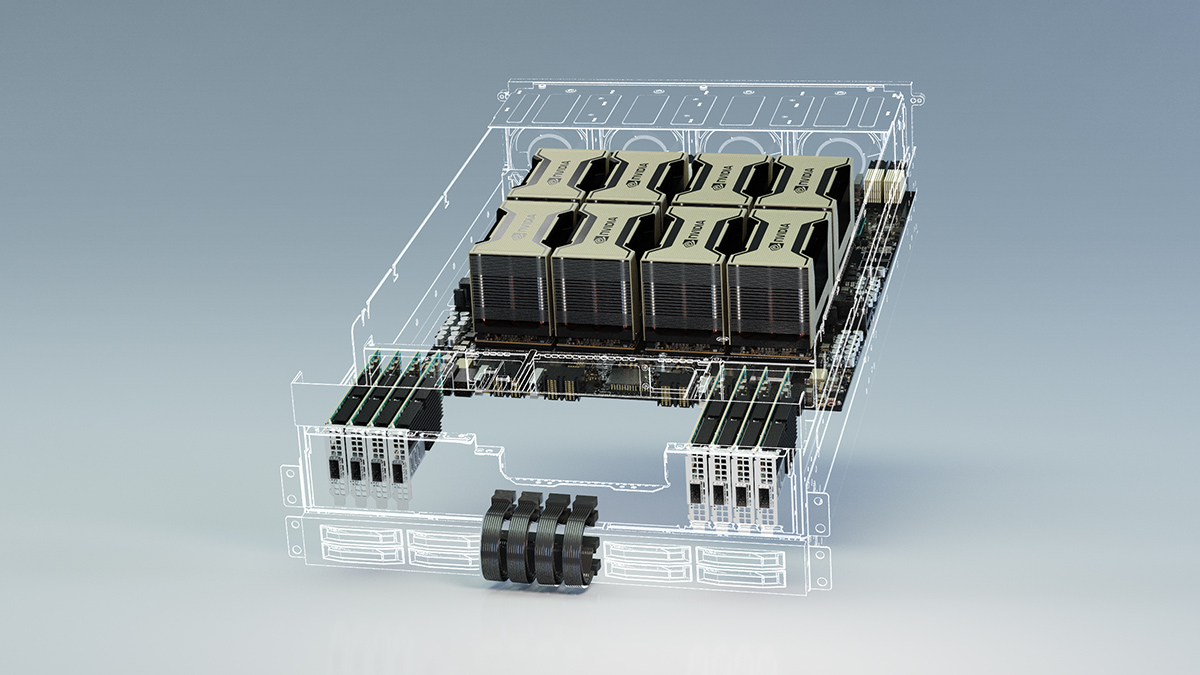

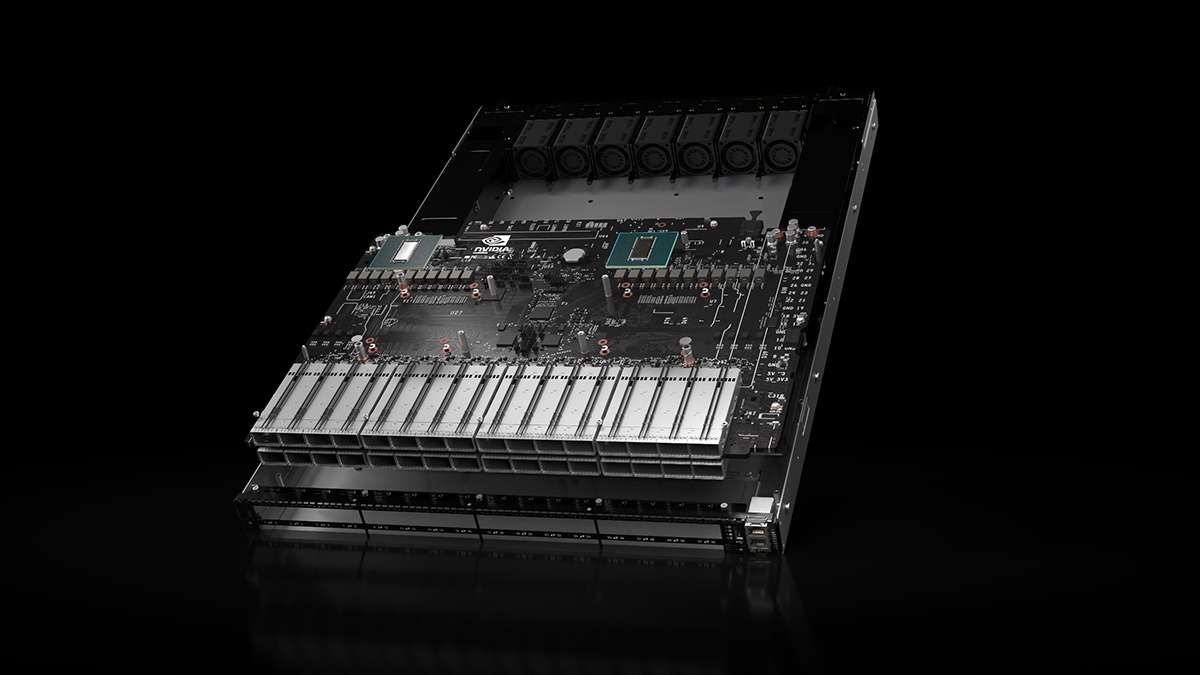

Hopper architecture GPU

This architecture powers the next generation of accelerated computing with unprecedented performance. It gives enterprises scalability and strong security, so that diverse workloads can be scaled securely. Hopper suits innovators of every size, whether they are exascale HPC enterprises or small organizations, who want to do their work in the shortest time possible.

The Hopper architecture is positioned as the next engine for meeting the world’s demand for artificial intelligence infrastructure. It is considered a massive leap in accelerated computing, and several technological breakthroughs were needed to achieve it.

The architecture is built with over 80 billion transistors on the cutting-edge TSMC 4N process. Hopper introduces five groundbreaking features that power the NVIDIA H100 Tensor Core GPU. Together they deliver nearly 30 times faster AI inference than the previous generation on models such as NVIDIA Megatron 530B, which was the world’s largest chatbot and generative language model.

Transformer engine

The Hopper architecture advances NVIDIA Tensor Core technology with the Transformer Engine, which is designed to accelerate the training of AI models. Hopper Tensor Cores can apply mixed FP8 and FP16 precisions to dramatically accelerate AI calculations for transformers. Hopper also triples the floating-point operations per second compared with the previous generation. Combined with the Transformer Engine and NVIDIA NVLink, Hopper Tensor Cores deliver an order-of-magnitude speedup on HPC and AI workloads.
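To make the precision trade-off concrete, here is a minimal sketch that rounds a number to a reduced mantissa width. It is not the Transformer Engine API. The helper name `round_to_mantissa_bits` is hypothetical, the FP8 case assumes the E4M3 layout (3 mantissa bits), and the model ignores exponent range limits, subnormals, and saturation.

```python
import math

def round_to_mantissa_bits(x: float, mantissa_bits: int) -> float:
    """Round x to the nearest value with the given number of stored mantissa bits.

    Illustrative only: exponent range, subnormals, and infinities are ignored.
    """
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)              # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)  # +1 accounts for the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)

value = 0.1
fp16_like = round_to_mantissa_bits(value, 10)  # FP16 stores 10 mantissa bits
fp8_like = round_to_mantissa_bits(value, 3)    # FP8 E4M3 stores 3 mantissa bits (assumed variant)

print(fp16_like)  # 0.0999755859375
print(fp8_like)   # 0.1015625
```

The FP8-like result is noticeably coarser than the FP16-like one. That is the reason a mixed-precision scheme keeps the wider format available for the values that need it.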

NVLink switch system

To move at the speed of business, exascale HPC and trillion-parameter AI models need seamless, high-speed communication between every GPU in a server cluster so that they can accelerate at scale.

The latest generation of NVLink is a scale-up interconnect. Combined with the new external NVLink switch, it lets organizations scale multi-GPU communication across multiple servers at impressive speeds. NVLink now also supports in-network computing, which was previously available mostly on InfiniBand.
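As a rough illustration of in-network computing, the toy model below lets a "switch" sum the buffers contributed by each GPU and hand every GPU the same result, so the reduction happens once inside the network instead of on the GPUs. The function name `switch_all_reduce` and the use of plain Python lists are hypothetical; this is not an NVLink or NCCL API.

```python
from typing import List

def switch_all_reduce(gpu_buffers: List[List[float]]) -> List[List[float]]:
    """Toy in-network all-reduce: sum the buffers element-wise in the 'switch'
    and return one copy of the result per participating GPU."""
    if not gpu_buffers:
        return []
    length = len(gpu_buffers[0])
    if any(len(buf) != length for buf in gpu_buffers):
        raise ValueError("all GPU buffers must have the same length")
    # The reduction is computed once, inside the switch.
    reduced = [sum(values) for values in zip(*gpu_buffers)]
    # Every GPU receives its own copy of the reduced result.
    return [list(reduced) for _ in gpu_buffers]

gradients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # one buffer per GPU
print(switch_all_reduce(gradients))  # [[9.0, 12.0], [9.0, 12.0], [9.0, 12.0]]
```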

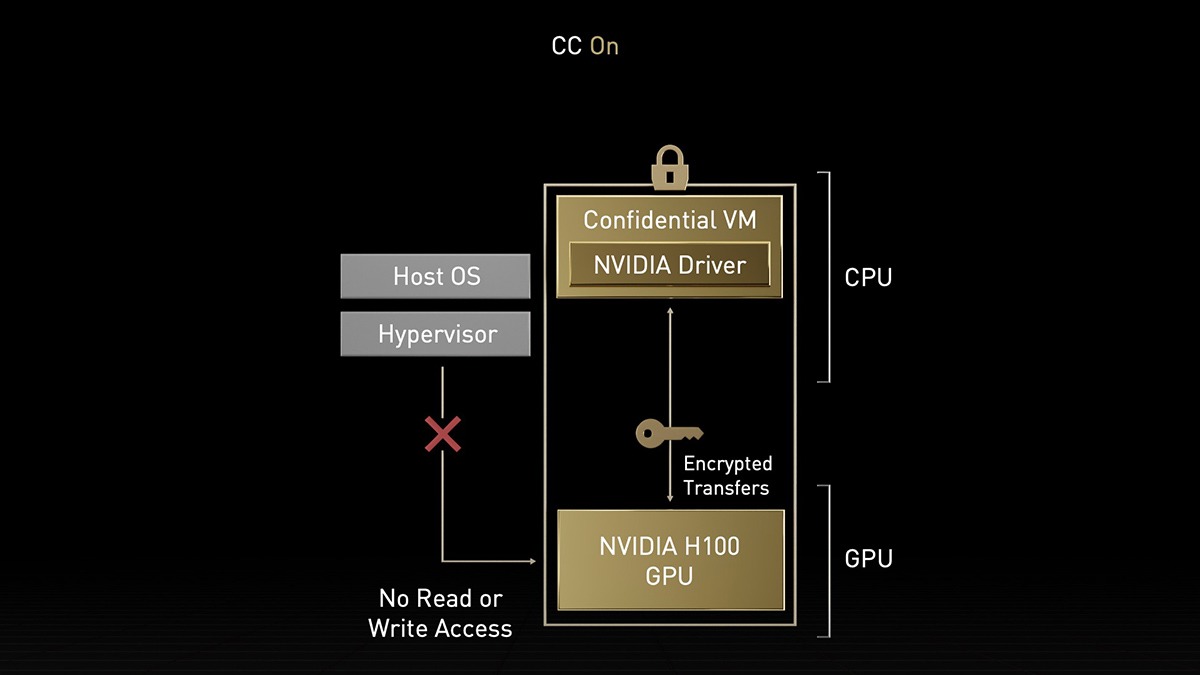

Confidential computing

Data is encrypted at rest in storage and in transit across the network, but it is not protected while it is being processed. NVIDIA confidential computing closes this gap by protecting data and applications while they are in use. The Hopper architecture is one of the first accelerated computing platforms with confidential computing capabilities.

The security is rooted in strong, hardware-based protection. Users can run applications on premises, in the cloud, or at the edge, and no unauthorized entity can view or modify the application code or data while it is in use. This goes a long way toward protecting the confidentiality and integrity of data and applications.

Multi-Instance GPU

The Hopper architecture further enhances Multi-Instance GPU (MIG). It supports multi-user, multi-tenant configurations across up to seven GPU instances, which is a great help in virtualized environments. Each instance is securely isolated with confidential computing at the hardware and hypervisor level, and each instance has its own dedicated video decoder.
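The multi-tenant idea can be sketched as a simple slot allocator. This is a toy model, not the MIG management interface. The class `GpuPartitioner`, its methods, and the tenant names are hypothetical, and the limit of seven comes from the paragraph above.

```python
from dataclasses import dataclass, field
from typing import Dict

MAX_INSTANCES = 7  # upper bound on GPU instances mentioned in the text

@dataclass
class GpuPartitioner:
    """Toy model of one GPU split into isolated instances, one tenant each."""
    assignments: Dict[int, str] = field(default_factory=dict)

    def allocate(self, tenant: str) -> int:
        """Give the tenant the lowest free instance slot, or fail if none is free."""
        for slot in range(MAX_INSTANCES):
            if slot not in self.assignments:
                self.assignments[slot] = tenant
                return slot
        raise RuntimeError("all GPU instances are in use")

    def release(self, slot: int) -> None:
        """Free an instance so another tenant can use it."""
        self.assignments.pop(slot, None)

gpu = GpuPartitioner()
first = gpu.allocate("team-a")   # hypothetical tenant names
second = gpu.allocate("team-b")
print(first, second)  # 0 1
```

Each slot maps to exactly one tenant, which mirrors the isolation property in a very simplified way. A real deployment would also size each instance's compute and memory.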

FAQs

1. What are NVIDIA Data Center GPU solutions?

NVIDIA Data Center GPU solutions are a range of powerful graphics processing units (GPUs) designed specifically for high-performance computing (HPC), artificial intelligence (AI), and data analytics in data center environments. These GPUs are optimized to handle complex computational workloads efficiently and offer substantial acceleration for a variety of applications.

2. How do NVIDIA Data Center GPU solutions differ from consumer-grade GPUs?

NVIDIA Data Center GPU solutions are tailored for enterprise-level computing tasks, focusing on scalability, reliability, and performance in data center settings. Unlike consumer-grade GPUs, which are aimed primarily at gaming and multimedia tasks, data center GPUs are engineered to handle heavy computational workloads such as AI training, scientific simulations, and deep learning.

3. What are the key benefits of using NVIDIA Data Center GPU solutions?

Some key benefits include:

- Accelerated performance: NVIDIA Data Center GPUs leverage advanced architectures and parallel processing capabilities to deliver significantly faster computation speeds.

- Enhanced scalability: These solutions are designed to scale seamlessly to meet the evolving demands of data center workloads, enabling organizations to handle increasing data volumes and complexity.

- AI optimization: With dedicated Tensor Cores and AI-specific features, NVIDIA Data Center GPUs excel in AI-related tasks, facilitating faster model training and inference.

- Energy efficiency: NVIDIA incorporates power-efficient designs in its data center GPU solutions, helping organizations reduce operational costs and minimize their environmental footprint.

4. What types of workloads are suitable for NVIDIA Data Center GPU solutions?

NVIDIA Data Center GPU solutions are well suited to a wide range of workloads, including:

- Deep learning training and inference

- High-performance computing (HPC) simulations

- Scientific computing and research

- Data analytics and visualization

- Virtualization and cloud gaming

- Rendering and media processing

5. Which industries commonly use NVIDIA Data Center GPU solutions?

Industries that frequently use NVIDIA Data Center GPU solutions include:

- Healthcare and life sciences (for medical imaging, drug discovery, and genomics)

- Financial services (for risk analysis, fraud detection, and algorithmic trading)

- Automotive (for autonomous vehicle development and simulation)

- Manufacturing (for predictive maintenance, quality control, and process optimization)

- Media and entertainment (for rendering, video processing, and content creation)

- Government and defense (for simulations, cybersecurity, and intelligence analysis)

6. How does NVIDIA support developers and enterprises in implementing Data Center GPU solutions?

NVIDIA provides various resources and support services to help developers and enterprises integrate and optimize Data Center GPU solutions, including:

- Developer tools and SDKs for GPU-accelerated computing

- Training programs and certification courses for AI and GPU computing

- Collaboration with industry partners and ecosystem developers

- Dedicated technical support and consulting services

- Access to the NVIDIA GPU Cloud (NGC) platform for optimized software containers and pre-trained models

7. Are NVIDIA Data Center GPU solutions compatible with popular data center architectures and frameworks?

Yes, NVIDIA Data Center GPU solutions are designed to integrate seamlessly with leading data center architectures and frameworks, including:

- NVIDIA DGX systems for AI research and development

- NVIDIA A100 Tensor Core GPUs for HPC and AI workloads

- NVIDIA CUDA parallel computing platform for GPU-accelerated computing

- Integration with popular frameworks like TensorFlow, PyTorch, and Apache Spark for deep learning and analytics applications

- Support for virtualization technologies such as NVIDIA vGPU for GPU sharing and resource allocation in virtualized environments