Deep Learning is a term that has become common in tech jargon in recent years. However, not everyone who has come across the term understands it in detail. Here is a consolidated overview of the key concepts, followed by a look at what the NVIDIA GPU family contributes to Deep Learning.

Difference between CPU and GPU

CPUs (Central Processing Units) and GPUs (Graphics Processing Units) are built for different kinds of work. A CPU is a general-purpose processor designed to handle a broad range of tasks. A GPU is a specialized processor built for highly parallel mathematical computation, which makes it well suited to machine learning tasks.

The CPU is central to the working of a computer. Every action in a computer system originates from instructions executed by the CPU. Often called the computer’s brain, the CPU takes in information, performs calculations on it, and sends the results where they need to go. The CPU includes several standard components, as listed below.

Cores

The cores are the heart of the CPU’s architecture. Each core operates using the instruction cycle: it fetches an instruction from memory, decodes it into operations the hardware can carry out, and executes it. With the proliferation of multi-core CPUs, processing power has grown considerably, because several cores can work on instructions at the same time.
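The instruction cycle can be illustrated with a toy model. The sketch below is only an illustration: the three-instruction set (`LOAD`, `ADD`, `HALT`), the register names, and the `run` function are hypothetical and do not correspond to any real CPU.

```python
# Toy model of the fetch-decode-execute cycle.
# The instruction set and register names are hypothetical.
def run(program):
    registers = {"A": 0, "B": 0}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        instruction = program[pc]                # fetch
        opcode, *operands = instruction.split()  # decode
        pc += 1
        if opcode == "LOAD":                     # execute
            reg, value = operands
            registers[reg] = int(value)
        elif opcode == "ADD":
            dst, src = operands
            registers[dst] += registers[src]
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return registers


program = ["LOAD A 2", "LOAD B 3", "ADD A B", "HALT"]
result = run(program)
print(result)
```

A real core performs these steps in hardware and overlaps them across many instructions, so this loop only shows the order of the steps.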

Cache

The cache is very fast memory built into the CPU, or in some designs placed on CPU-specific motherboards, to give quicker access to the data the CPU is currently using. Cache memory is faster than even the fastest RAM, so it speeds up the CPU by reducing how often it has to wait for main memory.
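The benefit of a cache depends on how often requested data is already in it. As a rough sketch, the following simulation counts hits and misses for a sequence of memory addresses. It assumes a least-recently-used eviction policy and a hypothetical `count_hits` helper; real CPU caches are implemented in hardware and are organized differently.

```python
from collections import OrderedDict


def count_hits(addresses, capacity):
    """Simulate a tiny cache with least-recently-used eviction (an assumption)."""
    cache = OrderedDict()
    hits = misses = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)        # mark as most recently used
        else:
            misses += 1
            cache[addr] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits, misses


accesses = [1, 2, 1, 3, 1, 2, 4, 1]
hits, misses = count_hits(accesses, capacity=2)
print(f"hits={hits}, misses={misses}")
```

Raising `capacity` in this sketch increases the number of hits for the same access pattern, which is the effect a larger cache aims for.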

Memory Management Unit

The MMU, or Memory Management Unit, manages the CPU’s access to RAM during the instruction cycle, translating the addresses a program uses into physical memory locations.

Control Unit

The control unit directs the operation of the processor. The clock rate determines the frequency at which the CPU generates electric pulses, and therefore how quickly it carries out the functions of the computer. The higher the CPU clock rate, the faster it will run, all else being equal. All these components work together, providing an environment where high-speed multitasking occurs. The CPU cores keep switching rapidly between hundreds of different tasks per second, so that many tasks appear to run at the same time.
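The relationship between clock rate and speed can be written as a simple formula: time equals the number of clock cycles divided by the clock frequency. The sketch below uses a hypothetical `execution_time_seconds` helper and made-up workload numbers. It deliberately ignores factors such as instructions per cycle, cache behavior, and memory latency, which also affect real performance.

```python
def execution_time_seconds(cycles, clock_hz):
    """Idealized run time: clock cycles needed divided by cycles per second."""
    if clock_hz <= 0:
        raise ValueError("clock_hz must be positive")
    return cycles / clock_hz


cycles = 3_000_000_000                          # hypothetical workload: 3 billion cycles
slow = execution_time_seconds(cycles, 2.0e9)    # 2.0 GHz clock
fast = execution_time_seconds(cycles, 3.0e9)    # 3.0 GHz clock
print(f"2.0 GHz: {slow:.2f} s, 3.0 GHz: {fast:.2f} s")
```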

What is GPU?

As mentioned earlier, GPUs, or Graphics Processing Units, are processors with many specialized cores. These cores deliver high performance when a task can be split up and spread across them to be processed in parallel.

GPUs have been a key component in sparking the AI boom and have become a key part of modern supercomputers, while continuing to power gaming and professional graphics.

Speaking of Artificial Intelligence, we cannot go far without discussing Machine Learning and Deep Learning.

How are Artificial Intelligence, Machine Learning, and Deep Learning interlinked

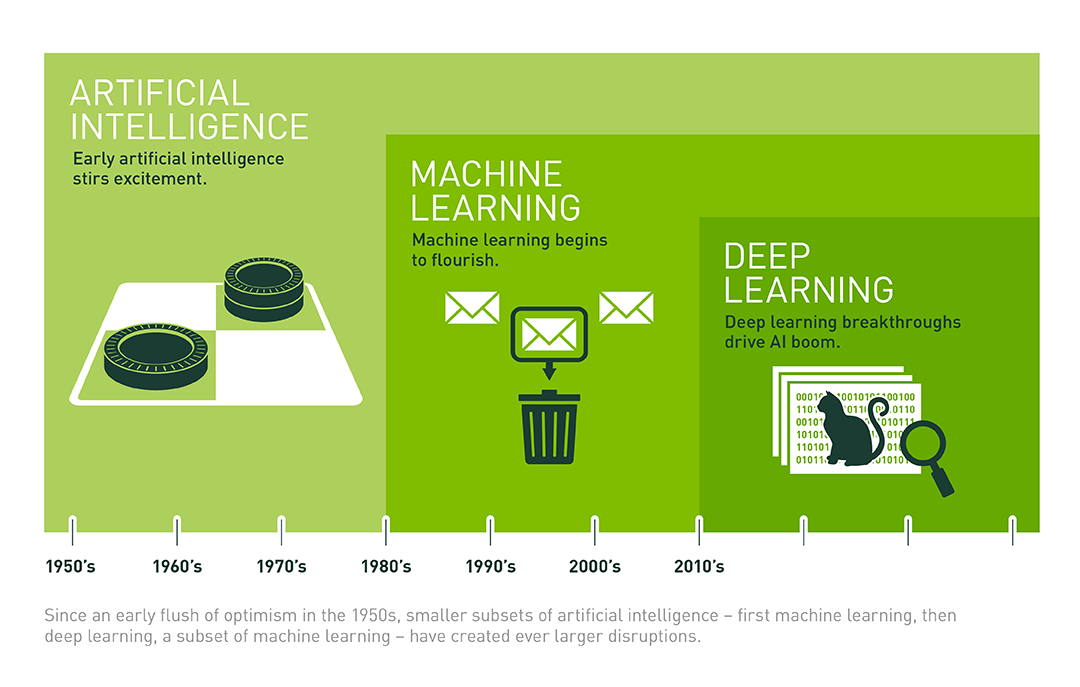

These concepts are interconnected. One can visualize them as concentric circles, the largest being AI. Inside it comes Machine Learning, which blossomed later, and finally Deep Learning forms the innermost circle.

Talking of Deep Learning

Deep Learning refers to algorithms that let machines learn patterns from data. Computers acquire remarkable capabilities through Deep Learning, e.g., the ability to recognize speech and translate it into another language.

The NVIDIA GPU family for Deep Learning supports the execution of demanding Deep Learning models. Advances in Artificial Intelligence have led to sophisticated Deep Learning algorithms, made practical in part by GPUs such as the NVIDIA GPU family for Deep Learning. Deep Learning is an important concept that has helped elevate performance across industries.

Implementation of Deep Learning

Deep learning relies on GPU acceleration for both training and inference. NVIDIA delivers GPU acceleration everywhere, from data centers and the world’s fastest supercomputers to laptops and desktops. If your data is in the cloud, NVIDIA GPU Deep Learning is available on services from Amazon, Google, IBM, Microsoft, and many others.

The world of computing is going through a tremendous change. With deep learning and AI, computers are learning to write their own software. NVIDIA GPU Deep Learning supports Deep Learning models that can help solve complex problems across different domains and categories of business. As machines learn by observing patterns in data, many tasks will become simpler in ways that were previously unimaginable. From healthcare to self-driving cars, deep learning is opening up a world of new possibilities.

FAQs

1. What is the NVIDIA GPU family for deep learning?

The NVIDIA GPU family for deep learning consists of several series of graphics processing units designed to accelerate artificial intelligence and machine learning tasks. These include the Tesla, Quadro, and GeForce series, as well as more specialized platforms like the NVIDIA DGX systems and the A100 GPUs. Each series caters to different needs, from personal deep learning projects to large-scale industrial AI applications.

2. How do NVIDIA GPUs accelerate deep learning tasks?

NVIDIA GPUs accelerate deep learning tasks through parallel processing capabilities, which allow them to perform thousands of operations simultaneously. This is particularly effective for the matrix and vector computations that are common in machine learning algorithms. Additionally, NVIDIA’s CUDA technology provides a programming model and software environment designed specifically for GPU computing, further enhancing performance for deep learning applications.
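To see why matrix computations suit parallel hardware, note that every element of a matrix product is an independent dot product. The sketch below splits a small multiplication into such independent tasks and hands them to a thread pool. It is an analogy only: the `matmul_parallel` helper is hypothetical, it is not CUDA code, and Python threads will not make this faster. A GPU runs the same kind of independent work across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Dot product of one row and one column."""
    return sum(a * b for a, b in zip(row, col))


def matmul_parallel(a, b, workers=4):
    """Multiply matrices by computing each output element as a separate task."""
    cols = list(zip(*b))                               # columns of b
    tasks = [(row, col) for row in a for col in cols]  # one task per output element
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda t: dot(*t), tasks))
    n = len(cols)
    return [flat[i * n:(i + 1) * n] for i in range(len(a))]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul_parallel(a, b)
print(product)
```

No task depends on the result of another, which is the property that lets the work be spread across many cores.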

3. Which NVIDIA GPU is best for deep learning beginners?

For deep learning beginners, the NVIDIA GeForce series, particularly models like the RTX 3060, RTX 3070, or RTX 3080, offers a good balance of price and performance. These GPUs provide sufficient power for most entry- to mid-level deep learning projects, including neural network training and inference, without the higher costs associated with more advanced models.

4. What makes the NVIDIA A100 GPU suitable for enterprise-level deep learning projects?

The NVIDIA A100 GPU is designed for the most demanding computational tasks, including enterprise-level deep learning projects. Key features that make it suitable include:

- Massive Parallel Computing Power: With thousands of CUDA cores, it can handle large-scale neural network training more efficiently.

- High Memory Bandwidth and Capacity: Essential for training complex models with large datasets.

- Multi-Instance GPU (MIG) Capability: Allows a single A100 GPU to be partitioned into smaller instances, optimizing resource utilization and providing greater flexibility for different workloads.

- Advanced Architecture: Built on NVIDIA’s Ampere architecture, offering improved performance per watt, making it more energy-efficient and cost-effective for large-scale deployments.

5. Can NVIDIA GPUs be used for tasks other than deep learning?

Yes, NVIDIA GPUs are versatile and can be used for a wide range of tasks beyond deep learning, including scientific simulations, 3D rendering, gaming, and more. Their parallel processing capabilities make them well-suited for any application requiring high computational power.

6. How do I choose the right NVIDIA GPU for my deep learning project?

Choosing the right NVIDIA GPU for your deep learning project depends on several factors:

- Project Scale: Larger projects with more complex models and bigger datasets might require GPUs with more memory and processing power, such as the Tesla or A100 series.

- Budget: Higher performance comes at a higher cost. Balance your performance needs with your available budget.

- Power and Space Constraints: High-performance GPUs may require more power and cooling, as well as physical space, which could be a consideration if you’re limited in either capacity.

- Software Compatibility: Ensure the GPU is supported by the deep learning frameworks and libraries you plan to use.
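When weighing project scale against GPU memory, a back-of-the-envelope estimate can help. The sketch below assumes 32-bit (4-byte) values and four stored copies per parameter (weights, gradients, and two optimizer states, as with an Adam-style optimizer). It ignores activations and framework overhead, so real usage will be higher. The function names and the 12 GB figure are hypothetical.

```python
def estimate_training_memory_gb(num_parameters, bytes_per_value=4, copies=4):
    """Rough lower bound on training memory in GB.

    Assumes `copies` stored values per parameter (weights, gradients,
    two optimizer states) and ignores activations and overhead.
    """
    return num_parameters * bytes_per_value * copies / 1e9


def fits_on_gpu(num_parameters, gpu_memory_gb):
    """True if the rough estimate is within the GPU's memory."""
    return estimate_training_memory_gb(num_parameters) <= gpu_memory_gb


small_model = 100_000_000      # 100 million parameters (hypothetical)
large_model = 1_000_000_000    # 1 billion parameters (hypothetical)
gpu_memory_gb = 12             # hypothetical card with 12 GB of memory

small_gb = estimate_training_memory_gb(small_model)
large_gb = estimate_training_memory_gb(large_model)
print(f"small: {small_gb:.1f} GB, fits: {fits_on_gpu(small_model, gpu_memory_gb)}")
print(f"large: {large_gb:.1f} GB, fits: {fits_on_gpu(large_model, gpu_memory_gb)}")
```

An estimate like this is a starting point for the scale and budget questions above, not a substitute for measuring memory use on the actual model.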

7. What support does NVIDIA offer for deep learning developers?

NVIDIA offers comprehensive support for deep learning developers, including:

- CUDA Toolkit and cuDNN: Software libraries and APIs that leverage GPUs for deep learning computations.

- NVIDIA Deep Learning SDK: A suite of tools, libraries, and resources designed to accelerate deep learning development.

- NGC (NVIDIA GPU Cloud): A hub for GPU-optimized software for deep learning, machine learning, and HPC applications.

- Developer Forums and Documentation: NVIDIA provides extensive documentation, tutorials, and community forums to help developers troubleshoot issues and share knowledge.