The Fast and Powerful Cloud for Visualization and Accelerated Computing

NVIDIA and Google Cloud are working together to help businesses solve data challenges faster, without spending a lot of money or managing complex infrastructure. NVIDIA GPUs can speed up machine learning, analytics, scientific simulation, and other HPC workloads, and NVIDIA® Quadro® Virtual Workstations on Google Cloud can accelerate rendering, simulation, and high-fidelity graphics work from anywhere.

GPUs ON GOOGLE CLOUD

Google Cloud Anthos is a Kubernetes-based application modernization platform. For customers looking for a hybrid cloud solution while coping with high on-premises demand, Anthos combines the convenience of moving to the cloud with the security of a single solution. It is offered as a hybrid cloud solution for NVIDIA GPU workloads.

NVIDIA DGX A100 with Google Cloud Anthos

The NVIDIA DGX A100 is the world’s leading AI system, built specifically for the needs of enterprises. Organizations can now create a hybrid AI cloud that combines their existing on-premises DGX infrastructure with NVIDIA GPUs in Google Cloud for fast access to additional computing capacity. Google Cloud Anthos on NVIDIA DGX A100 lets enterprises complement the deterministic, unrivaled performance of their dedicated DGX infrastructure with the ease and flexibility of AI compute in the cloud.

NVIDIA A100 Tensor Core GPU

NVIDIA® A100 delivers unmatched acceleration at every scale for AI, big data, and high-performance computing (HPC), helping tackle the world’s biggest computing challenges.

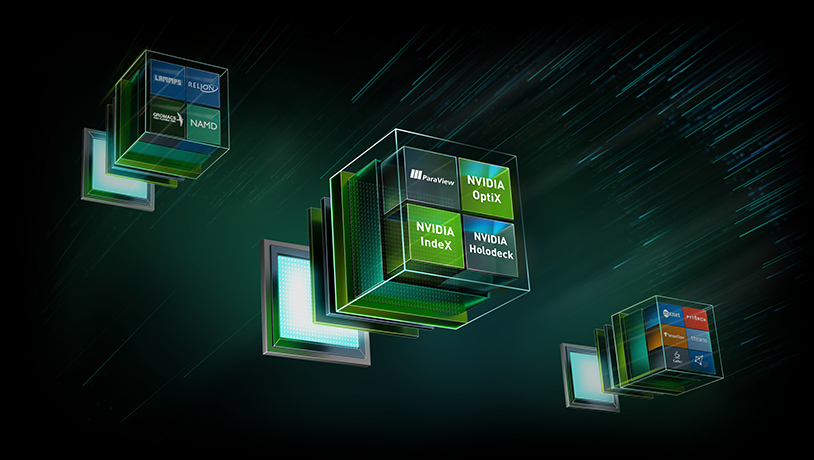

NGC GPU-Accelerated Containers

NGC provides an easy way to get prebuilt, GPU-optimized containers for deep learning software, HPC applications, and HPC visualization tools on Google Cloud that take full advantage of NVIDIA A100, V100, P100, and T4 GPUs. It also includes pretrained models and scripts for building optimized models for popular use cases, including classification, detection, and text-to-speech. You can deploy production-quality, GPU-accelerated software in just minutes.
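As a rough sketch of what launching an NGC container on a GPU VM can look like, the Python helper below assembles a `docker run` command. It assumes Docker 19.03 or later with the NVIDIA Container Toolkit installed on the VM, because that setup is what makes the `--gpus` flag work. The `pytorch` image, the `24.01-py3` tag, and the host path are example values, so check the NGC catalog for the images and tags you actually need.

```python
from __future__ import annotations

import shlex


def ngc_docker_command(image: str, tag: str, host_dir: str) -> list[str]:
    """Return the argv for running an NGC container with every GPU attached.

    Assumes Docker 19.03+ plus the NVIDIA Container Toolkit on the VM.
    """
    return [
        "docker", "run",
        "--gpus", "all",                  # expose all of the VM's GPUs to the container
        "--rm", "-it",                    # interactive session, container removed on exit
        "-v", f"{host_dir}:/workspace",   # mount a host directory (example path)
        f"nvcr.io/nvidia/{image}:{tag}",  # NGC images are served from nvcr.io
    ]


if __name__ == "__main__":
    # Example values only; look up current image tags in the NGC catalog.
    cmd = ngc_docker_command("pytorch", "24.01-py3", "/home/user/project")
    print(shlex.join(cmd))
```

Returning a list instead of a single string means you can pass the result straight to `subprocess.run` without worrying about shell quoting.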

TensorRT by NVIDIA

NVIDIA TensorRT™ is a high-performance deep learning inference optimizer and runtime for low-latency, high-throughput inference applications. You can optimize trained neural network models such as CNNs, calibrate them for lower precision while maintaining high accuracy, and deploy them to Google Cloud. Because TensorRT is tightly integrated with TensorFlow, you get TensorFlow’s flexibility together with TensorRT’s powerful optimizations.
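To illustrate what "calibrate for lower precision" means, here is a conceptual Python sketch of symmetric INT8 quantization with a scale chosen from calibration data. It uses the simple max-abs scheme, which maps the largest observed magnitude to 127. This is not TensorRT's code. TensorRT also offers other calibrators, such as entropy calibration, that choose the range differently so outliers do not waste precision. The activation values are hypothetical.

```python
from __future__ import annotations


def calibrate_scale(calibration_values: list[float]) -> float:
    """Max-abs calibration: map the largest observed magnitude to 127."""
    max_abs = max(abs(v) for v in calibration_values)
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(x: float, scale: float) -> int:
    """Round x/scale to the nearest integer (ties to even) and clamp to INT8."""
    q = round(x / scale)
    return max(-127, min(127, q))


def dequantize(q: int, scale: float) -> float:
    """Map an INT8 value back to an approximate real value."""
    return q * scale


if __name__ == "__main__":
    # Hypothetical activations collected from a few calibration batches.
    activations = [0.02, -1.5, 0.75, 2.54, -0.3]
    scale = calibrate_scale(activations)
    for a in activations:
        q = quantize(a, scale)
        print(f"{a:+.3f} -> {q:+4d} -> {dequantize(q, scale):+.3f}")
```

Any value inside the calibrated range comes back within half a quantization step (`scale / 2`) of the original. Values outside the range are clamped, and that is why the choice of calibration data matters for accuracy.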

NVIDIA GPUs and Google Kubernetes Engine

NVIDIA GPUs on Google Kubernetes Engine (GKE) supercharge compute-intensive applications such as computer vision, image analysis, and financial modeling by scaling to thousands of GPU-accelerated instances. Package your GPU-accelerated applications into containers and tap into GKE’s processing power with NVIDIA A100, V100, T4, P100, or P4 GPUs whenever you need them, without having to manage hardware or virtual machines (VMs).
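As a minimal sketch of how a containerized workload requests a GPU on GKE, the Python function below builds a Pod manifest as a dict and prints it as JSON, which `kubectl apply -f` also accepts. It assumes a node pool with the chosen GPU type already exists and has NVIDIA drivers installed. The Pod name and the `nvidia/cuda` image tag are example values, so confirm that the tag exists before using it.

```python
import json


def gpu_pod_manifest(name: str, image: str, accelerator: str, gpus: int = 1) -> dict:
    """Build a Pod spec that asks the scheduler for `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # GKE labels GPU nodes with their accelerator type; this pins the Pod there.
            "nodeSelector": {"cloud.google.com/gke-accelerator": accelerator},
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "command": ["nvidia-smi"],  # simple smoke test: list visible GPUs
                    # GPUs are an extended resource and are requested via limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest(
        "gpu-smoke-test", "nvidia/cuda:12.2.0-base-ubuntu22.04", "nvidia-tesla-t4"
    )
    print(json.dumps(manifest, indent=2))
```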

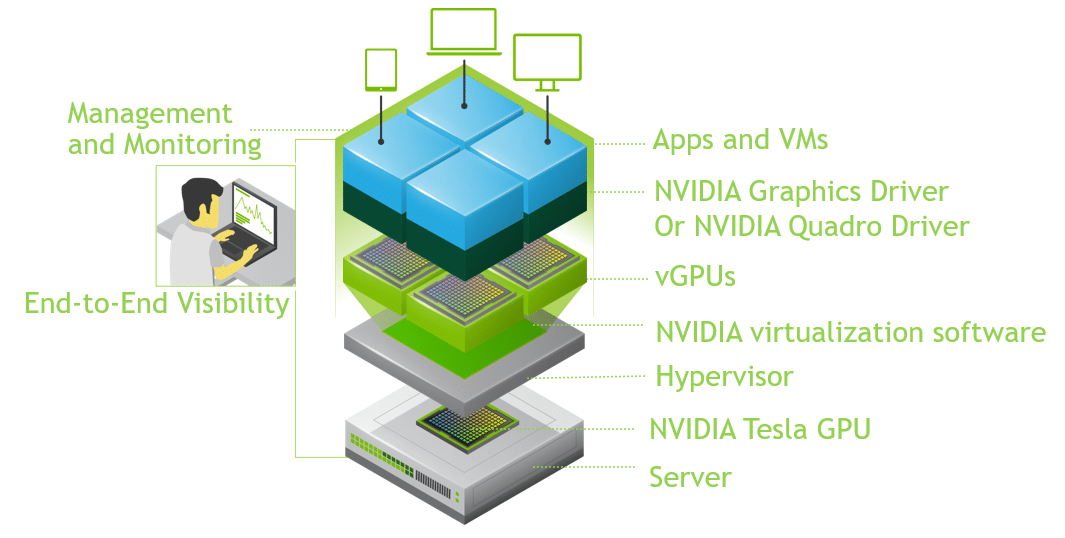

Virtualized Graphics with GPU Acceleration

NVIDIA Quadro Virtual Workstations provide GPU-accelerated graphics that let creative and technical professionals work more efficiently from anywhere, accessing the most demanding professional design and engineering applications from the cloud. Designers and engineers can now run virtual workstations directly from Google Cloud with NVIDIA T4, V100, P100, and P4 GPUs.

How to start a Google Cloud GPU instance

To begin, create a Google Cloud account. You can do this with your Gmail/Google account. Once your account is set up, you should see something like this.

Make sure your account is activated as a paid account. Although Google gives you $300 in free credits, you must upgrade to a paid (full) account to use a GPU. Upgrading does not forfeit the free credits: you can keep using the remaining $300 credit, and you are only charged once it is used up.

Before starting a GPU instance, make sure your GPU quota is set to 1 or to the number of GPUs you need. The quota caps how many GPUs your project can use.

To raise your quota, type “quota” into the search field and select All Quotas from the results. To filter the results, click the filter button (three horizontal bars) in the top-left corner of the screen. Select ‘Limit Name’ first, and then ‘GPUs (all regions).’

Clicking the ‘ALL QUOTAS’ button should take you to a page that displays the global quota. Then choose Edit Quotas from the drop-down menu.

You should see something like this on your screen:

Set the GPU limit to the number of GPUs you need; in most cases, I need one. Submit your request with a brief description. Quota increase requests are usually answered within a few hours, or at most 1-2 days.
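If you would rather check the quota from a script than in the console, the Python sketch below reads quota data that has already been parsed from JSON. It assumes output shaped like `gcloud compute project-info describe --format=json`, which contains a `quotas` list of `metric`/`limit`/`usage` entries. It also assumes that `GPUS_ALL_REGIONS` is the metric behind the console's "GPUs (all regions)" limit. Verify both assumptions against your own output. The sample data is hypothetical and trimmed.

```python
import json


def remaining_quota(describe_output: dict, metric: str) -> float:
    """Return limit minus usage for one quota metric, or raise if it is absent."""
    for quota in describe_output.get("quotas", []):
        if quota.get("metric") == metric:
            return quota.get("limit", 0.0) - quota.get("usage", 0.0)
    raise KeyError(f"quota metric {metric!r} not found")


def can_launch(describe_output: dict, metric: str, requested: int) -> bool:
    """True if `requested` more GPUs fit under the current quota."""
    return remaining_quota(describe_output, metric) >= requested


if __name__ == "__main__":
    # Hypothetical sample; real output lists many more quotas.
    sample = json.loads(
        '{"quotas": [{"metric": "GPUS_ALL_REGIONS", "limit": 1.0, "usage": 0.0}]}'
    )
    print(can_launch(sample, "GPUS_ALL_REGIONS", 1))  # True
```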

Keep in mind how much GPU memory your project will require. If your models or inputs are very large, you may need a lot of GPU memory. Increasing the instance’s RAM when you create it won’t help, because system RAM is separate from GPU memory. In my experience, the only way to get more GPU memory is to add more GPUs (or switch to a GPU type with more memory). This graph depicts the amount of GPU memory available for each GPU type; it is a fixed amount that you cannot change. If your workload needs more GPU memory than is available, you will get a CUDA out-of-memory error, so plan for this if it applies to you.
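A back-of-the-envelope estimate can help you pick a GPU type and count before you hit an out-of-memory error. The Python sketch below uses a common rule of thumb of about 16 bytes per parameter for FP32 training with the Adam optimizer. Activation memory depends on batch size and architecture, so you pass it in yourself. The per-GPU memory figures are the nominal sizes of these Google Cloud GPU types. Dividing by GPU count assumes the model and optimizer state are sharded across GPUs. Plain data parallelism keeps a full copy on every GPU, so in that case adding GPUs does not lower per-GPU memory.

```python
import math

# Nominal per-GPU memory (GB) for GPU types on Google Cloud, keyed by accelerator name.
GPU_MEMORY_GB = {
    "nvidia-tesla-p4": 8,
    "nvidia-tesla-t4": 16,
    "nvidia-tesla-p100": 16,
    "nvidia-tesla-v100": 16,
    "nvidia-tesla-a100": 40,
    "nvidia-a100-80gb": 80,
}


def training_memory_gb(num_params: float, extra_gb: float = 0.0,
                       bytes_per_param: int = 16) -> float:
    """Rule-of-thumb estimate for FP32 training with Adam.

    16 bytes/param = 4 (weights) + 4 (gradients) + 8 (two Adam moment buffers).
    Pass your own estimate for activations and workspace via extra_gb.
    """
    return num_params * bytes_per_param / 1e9 + extra_gb


def gpus_needed(required_gb: float, gpu_type: str) -> int:
    """GPUs needed if the memory is split evenly across them (sharded training only)."""
    return math.ceil(required_gb / GPU_MEMORY_GB[gpu_type])


if __name__ == "__main__":
    need = training_memory_gb(1e9, extra_gb=4.0)  # hypothetical 1B-parameter model
    for gpu in ("nvidia-tesla-t4", "nvidia-tesla-a100"):
        print(gpu, need, "GB ->", gpus_needed(need, gpu), "GPU(s)")
```

Under these assumptions, the hypothetical 1B-parameter model needs about 20 GB. That is two T4s or one A100.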

FAQs

1. What are NVIDIA GPUs on Google Cloud Platform (GCP)?

NVIDIA GPUs on GCP are powerful graphics processing units available on Google Cloud’s infrastructure services. These GPUs can be attached to virtual machine instances for various compute-intensive tasks such as machine learning, data analytics, scientific simulations, and 3D visualizations. Google Cloud offers several types of NVIDIA GPUs, including the Tesla T4, P100, and V100, to cater to different performance and computational needs.

2. How can I benefit from using NVIDIA GPUs on GCP?

Using NVIDIA GPUs on GCP can significantly accelerate your computational workloads compared to using traditional CPUs alone. Benefits include:

- Faster Processing: GPUs provide parallel processing capabilities that are ideal for high-performance computing tasks.

- Cost Efficiency: By leveraging GPUs for specific tasks, you can complete jobs faster and more cost-effectively than using CPUs for the same workloads.

- Scalability: GCP allows you to scale your GPU resources up or down based on your computational needs, offering flexibility and control over your infrastructure costs.

- Simplified Workflow: Google Cloud’s integration with NVIDIA GPUs simplifies the setup and management of compute instances with GPU acceleration.

3. Which NVIDIA GPU models are available on GCP, and what are their use cases?

GCP offers various NVIDIA GPU models, including:

- Tesla T4: Best for machine learning inference and small-scale training or lighter graphical workloads.

- Tesla P100: Suited for general-purpose GPU computations, including medium to large-scale machine learning training tasks and high-performance computing (HPC).

- Tesla V100: Designed for the most demanding computational tasks, including training large machine learning models and HPC.

Each model caters to specific performance needs and budget considerations, allowing users to select the most appropriate GPU for their projects.

4. How do I attach an NVIDIA GPU to a VM instance in GCP?

To attach an NVIDIA GPU to a VM instance in GCP:

- Navigate to the Google Cloud Console and select “Compute Engine.”

- Click “Create Instance” or select an existing instance to edit.

- Under the “Machine Configuration” section, choose the machine type and then click “CPU platform and GPU” to add a GPU.

- In the “GPU type” dropdown, select the NVIDIA GPU model you require and specify the number of GPUs.

- Complete the instance setup by configuring the remaining options and launch the instance.

- Install the necessary NVIDIA GPU drivers and CUDA toolkit on your VM instance to begin utilizing the GPU.
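The console steps above can also be scripted. As a sketch, the Python helper below assembles a `gcloud compute instances create` command using the `--accelerator=type=...,count=...` flag. It also sets `--maintenance-policy=TERMINATE`, because GPU VMs typically cannot live-migrate during host maintenance. The instance name, zone, machine type, and GPU type are example values. The VM still needs NVIDIA drivers and the CUDA toolkit installed afterward, as in the last step.

```python
from __future__ import annotations

import shlex


def gpu_instance_command(name: str, zone: str, machine_type: str, gpu_type: str,
                         gpu_count: int = 1, preemptible: bool = False) -> list[str]:
    """Build argv for `gcloud compute instances create` with GPUs attached."""
    cmd = [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--accelerator=type={gpu_type},count={gpu_count}",
        # GPU VMs generally cannot live-migrate, so they stop during host maintenance.
        "--maintenance-policy=TERMINATE",
    ]
    if preemptible:
        cmd.append("--preemptible")  # cheaper, but Google Cloud may reclaim the VM
    return cmd


if __name__ == "__main__":
    # Example values; confirm the GPU type is offered in your zone first.
    cmd = gpu_instance_command("my-gpu-vm", "us-central1-a", "n1-standard-8", "nvidia-tesla-t4")
    print(shlex.join(cmd))
```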

5. Are there any specific quotas or limitations when using NVIDIA GPUs on GCP?

Yes, GCP imposes quotas on the number of GPUs you can attach to VM instances, which vary by GPU model and region. These quotas are in place to ensure fair resource distribution among users. You may need to request an increase in your GPU quota if your project requires more GPU resources than your current quota allows. Additionally, not all GPU types are available in every region, so you’ll need to check the availability in your desired region.
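To check availability before choosing a region, one option is to list accelerator types with `gcloud compute accelerator-types list --format=json` and filter the result. The Python sketch below assumes each entry has a `name` field and a `zone` field, where the zone may appear as a full resource URL. Check this against your actual output. The sample data is hypothetical.

```python
from __future__ import annotations

import json


def zones_offering(accelerator_types: list[dict], gpu_type: str) -> list[str]:
    """Return the sorted zones whose accelerator list includes gpu_type."""
    zones = set()
    for entry in accelerator_types:
        if entry.get("name") == gpu_type:
            # The zone field may be a full resource URL; keep only its last segment.
            zones.add(entry.get("zone", "").rsplit("/", 1)[-1])
    return sorted(zones)


if __name__ == "__main__":
    # Hypothetical, trimmed sample; real output has more fields and entries.
    sample = json.loads("""[
      {"name": "nvidia-tesla-t4",
       "zone": "https://www.googleapis.com/compute/v1/projects/p/zones/us-central1-b"},
      {"name": "nvidia-tesla-v100", "zone": "us-central1-a"},
      {"name": "nvidia-tesla-t4", "zone": "europe-west4-a"}
    ]""")
    print(zones_offering(sample, "nvidia-tesla-t4"))  # ['europe-west4-a', 'us-central1-b']
```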

6. How is the pricing structured for NVIDIA GPUs on GCP?

Pricing for NVIDIA GPUs on GCP is structured on a per-hour basis and varies depending on the type of GPU, the region in which your VM is located, and whether the instance is preemptible or not. Preemptible instances offer a lower cost in exchange for the possibility that GCP might terminate these instances if it requires access to those resources. Pricing details are transparently provided in the GCP pricing documentation, allowing users to estimate costs based on their specific requirements.
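Because GPUs and the VM they are attached to are both priced per hour, a rough compute estimate is simple arithmetic: hours × (GPU rate × GPU count + VM rate). The Python sketch below encodes that formula. The rates in the example are hypothetical placeholders, not Google Cloud prices, so take real figures from the GCP pricing documentation for your region and GPU type. Disk, network, and other charges are ignored.

```python
from __future__ import annotations


def estimate_cost(hours: float, gpu_hourly_rate: float, gpu_count: int,
                  vm_hourly_rate: float) -> float:
    """Compute-only cost for running a GPU VM for `hours` hours.

    Ignores disk, network, and any discounts not reflected in the rates passed in.
    """
    return hours * (gpu_hourly_rate * gpu_count + vm_hourly_rate)


if __name__ == "__main__":
    # HYPOTHETICAL rates for illustration only; use real ones from the GCP pricing pages.
    on_demand = estimate_cost(10, gpu_hourly_rate=0.50, gpu_count=2, vm_hourly_rate=0.25)
    preemptible = estimate_cost(10, gpu_hourly_rate=0.20, gpu_count=2, vm_hourly_rate=0.10)
    print(f"on-demand: ${on_demand:.2f}, preemptible: ${preemptible:.2f}")
```

Running the same workload through the function with on-demand and preemptible rates makes the trade-off concrete. Preemptible runs cost less but may be interrupted, so they suit jobs that can checkpoint and resume.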

7. Can I use NVIDIA GPUs on GCP for gaming or graphical workloads?

While NVIDIA GPUs on GCP are primarily designed for computational tasks such as machine learning and scientific simulations, they can also be used for gaming and graphical workloads, including game streaming and 3D rendering. However, users should ensure compliance with Google Cloud’s terms of service and consider the latency and bandwidth requirements for interactive graphical applications to ensure a satisfactory user experience.