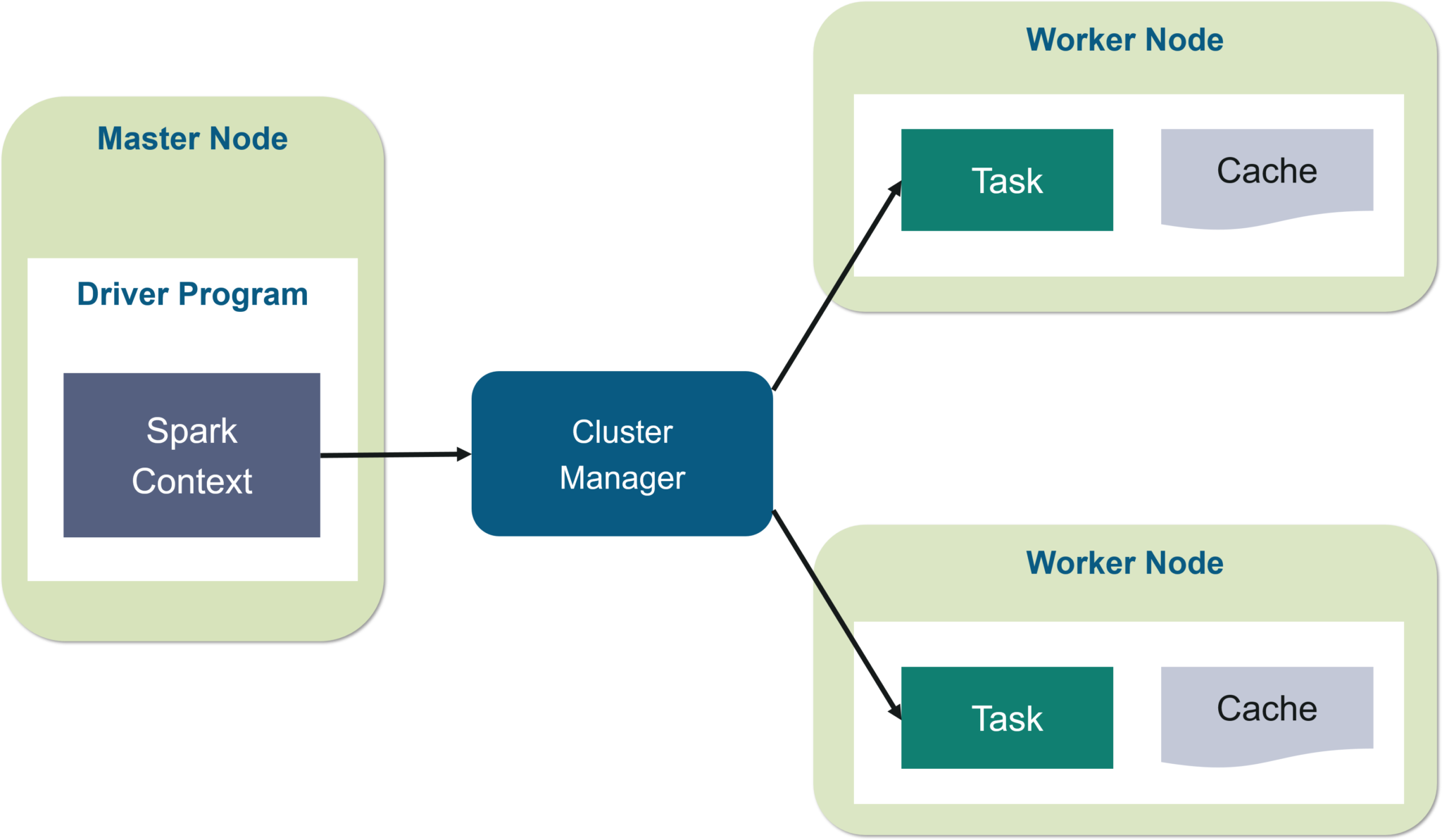

Apache Spark Distributed Computing

Apache Spark is a computational framework that can quickly process large data sets and distribute processing tasks across multiple machines, either on its own or in conjunction with other parallel processing tools. These two characteristics are critical in big data and machine learning, which require vast computational capacity to process large data sets. Spark relieves developers of some of…