Perhaps you think of artificial intelligence as something highly specialized, used only by scientists in niche research areas. That view is out of date.

AI is becoming ubiquitous and indispensable across business areas and functions. It is steadily becoming part of everyday life for a wide variety of people and is no longer a tool reserved for scientists. AI is now used in business applications, IT process optimization, recommendations and predictions, and improving employee and customer experiences.

Because AI is set to play an indisputable role in the future, it pays to be prepared for it now. Intel has responded by optimizing its general-purpose data center processors for AI so that they can meet future demands and requirements. Intel provides built-in AI acceleration along with optimizations that are compatible with almost all popular AI frameworks.

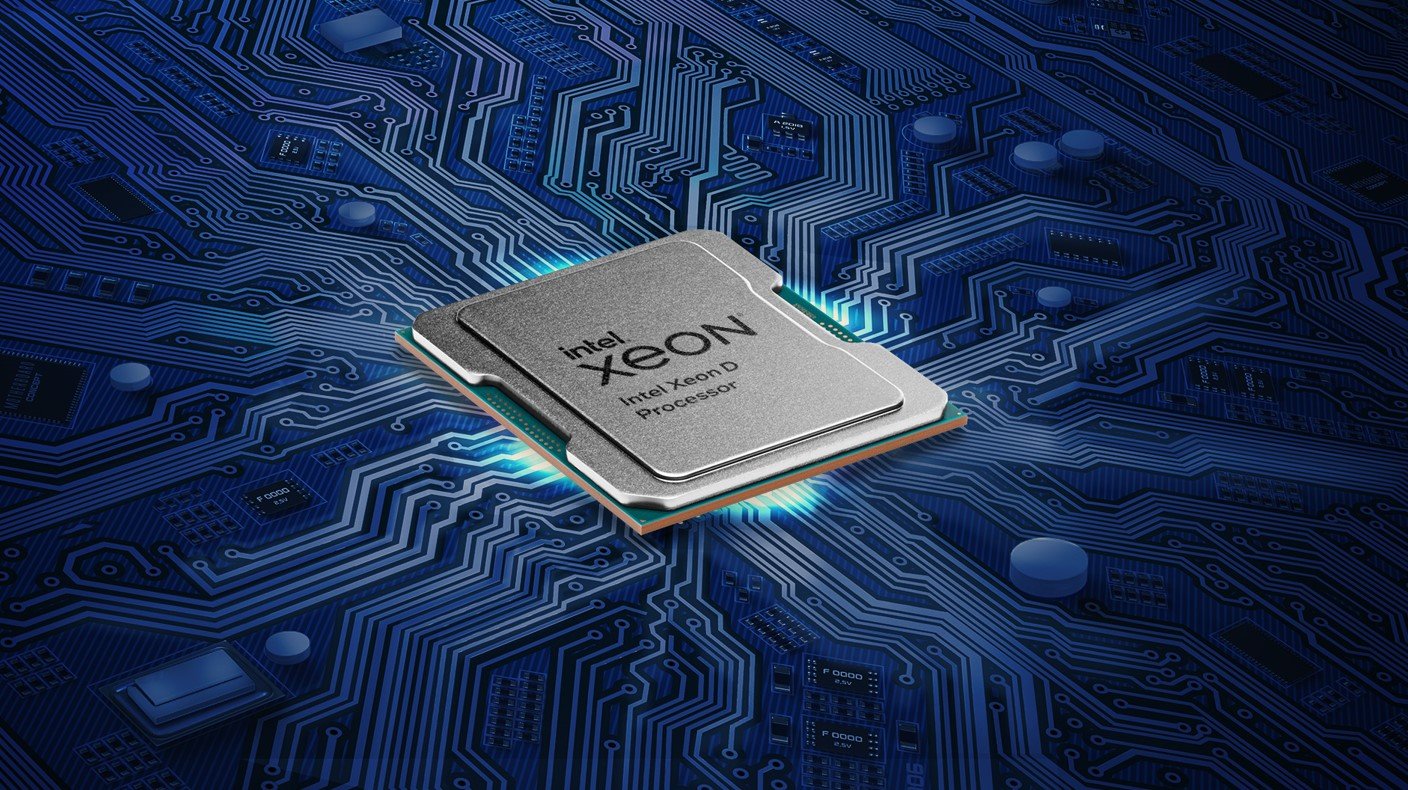

How is Intel changing the market?

Intel describes its Xeon Scalable processors as the only data center CPUs with built-in AI acceleration. The processors also include hardware enhancements for security, and Intel builds support for software optimization right into the silicon.

Intel Xeon CPUs are supported by oneAPI, an open, standards-based programming model built on open-source code. This makes it easier for you to build and deploy smarter, more useful models for your customers. The combination of oneAPI and Xeon CPUs has drawn a lot of attention because it simplifies the path from an AI concept to a fully working deployment. The new processors also let your customers choose from a wide range of verified, pre-integrated AI and data analytics solutions, including enterprise databases and a variety of applications.

Here are some examples of organizations building AI on Intel Xeon processors:

- Burger King: The company used deep learning to build a recommendation system for its fast-food menu, aiming both to increase sales and to improve the customer experience. The system correlates attributes such as time, location, and weather at the moment a customer places an order. It uses Apache Spark, an open-source analytics engine, to integrate and process the data, and the model was trained with distributed MXNet, an open-source deep learning framework, all running on a cluster of Intel Xeon processors.

- SK Telecom: One of the biggest mobile operators in South Korea deployed Intel Xeon processors to analyze data across all of its cell towers. The company built a complete end-to-end AI pipeline using the Intel Math Kernel Library, Intel Optimization for TensorFlow, and Analytics Zoo, Intel's unified analytics and AI platform for Apache Spark, all running on a cluster of servers powered by Xeon processors with Intel's deep learning acceleration.

- HYHY: This Chinese company develops deep learning and computer vision technology. It created a medical imaging system that helps the medical industry diagnose a range of diseases. The system relies on Intel Xeon Scalable processors with Intel's deep learning acceleration, combined with the OpenVINO toolkit, which is designed for workloads such as speech recognition, computer vision, and natural language processing.
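To make the Burger King idea of correlating order context (time, location, weather) with menu choices more concrete, here is a deliberately simplified sketch in plain Python. It is not Burger King's system: it swaps the deep learning model described in the case study for simple per-context frequency counts and uses no Intel, Spark, or MXNet APIs. The feature names, the sample orders, and the `build_context_counts` and `recommend` helpers are all hypothetical.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Iterable, List, Tuple

# Hypothetical context features: (time_of_day, location, weather).
Context = Tuple[str, str, str]


def build_context_counts(
    orders: Iterable[Tuple[Context, str]],
) -> DefaultDict[Context, Counter]:
    """Count how often each menu item was ordered in each context."""
    counts: DefaultDict[Context, Counter] = defaultdict(Counter)
    for context, item in orders:
        counts[context][item] += 1
    return counts


def recommend(
    counts: DefaultDict[Context, Counter], context: Context, k: int = 2
) -> List[str]:
    """Return up to k items most often ordered in this context.

    Unseen contexts fall back to overall popularity across all contexts.
    """
    if context in counts:  # membership test does not create a new entry
        ranked = counts[context]
    else:
        ranked = Counter()
        for per_context in counts.values():
            ranked.update(per_context)
    return [item for item, _ in ranked.most_common(k)]


if __name__ == "__main__":
    # Invented sample orders, for illustration only.
    sample_orders = [
        (("morning", "downtown", "cold"), "coffee"),
        (("morning", "downtown", "cold"), "coffee"),
        (("morning", "downtown", "cold"), "hash browns"),
        (("evening", "suburb", "hot"), "milkshake"),
        (("evening", "suburb", "hot"), "milkshake"),
        (("evening", "suburb", "hot"), "fries"),
    ]
    counts = build_context_counts(sample_orders)
    print(recommend(counts, ("morning", "downtown", "cold")))  # ['coffee', 'hash browns']
    print(recommend(counts, ("evening", "suburb", "hot"), k=1))  # ['milkshake']
```

A production system would learn from far richer signals and generalize to unseen contexts; the counting approach only shows the shape of the problem: context in, ranked items out.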

Conclusion

Artificial intelligence is the next big wave in the computing industry, and our world is becoming smarter and more connected with each passing day. The Intel Xeon processor family has helped drive these developments, acting as a catalyst for the emerging AI-driven computing era. AI can tackle large-scale problems that would otherwise be unmanageable and can take over time-consuming tasks. It is fair to expect it to speed up invention and scientific discovery, both by handling monotonous tasks and by acting as an extension of our own capabilities and senses.

Deep learning is one of the fastest-growing areas of machine learning. Neural networks can be trained to interpret data with greater accuracy and speed, which makes them exceptionally useful for complex tasks such as speech recognition, image search, and natural language processing. Intel has revamped its processor technology to strengthen deep learning, and Xeon processors use these built-in capabilities to take AI performance to the next level.

FAQs

1. What is the Intel oneAPI AI Analytics toolkit?

The Intel oneAPI AI Analytics toolkit is a comprehensive suite of tools designed to accelerate AI and analytics workflows on Intel hardware. It provides developers with a unified programming model and a set of optimized libraries, frameworks, and tools to build, deploy, and optimize AI, machine learning, and data analytics applications.

2. What are the key components included in the Intel oneAPI AI Analytics toolkit?

The toolkit is one of several toolkits in the Intel oneAPI family, alongside the Intel oneAPI Base Toolkit, HPC Toolkit, IoT Toolkit, and Rendering Toolkit. It bundles components such as the Intel Distribution for Python, Intel Extension for Scikit-learn, and Intel-optimized builds of frameworks like TensorFlow and PyTorch. These components draw on performance libraries such as the Intel oneAPI Deep Neural Network Library (oneDNN) and the Intel Math Kernel Library (Intel MKL, now oneMKL).

3. What programming languages are supported by the Intel oneAPI AI Analytics toolkit?

The toolkit itself is centered on Python, while the broader oneAPI family supports C, C++, Fortran, Python, and Data Parallel C++ (DPC++). DPC++ is an extension of C++, built on the Khronos SYCL standard, tailored for heterogeneous computing, so developers can write code that runs across CPUs, GPUs, FPGAs, and other accelerators.

4. How does the Intel oneAPI AI Analytics toolkit help developers accelerate their workflows?

The toolkit offers optimized versions of libraries and frameworks that developers already use for AI and analytics tasks, so existing code can take advantage of Intel hardware features, such as vector instructions and built-in AI acceleration, with few or no code changes. This helps developers improve the performance, scalability, and efficiency of their applications.

5. Can the Intel oneAPI AI Analytics toolkit be used for both training and inference in AI models?

Absolutely, the toolkit caters to both training and inference stages of AI model development. Developers can leverage libraries like oneDNN (formerly known as Intel MKL-DNN) for deep learning training and inference acceleration, along with other included tools and frameworks for various AI tasks.

6. Is the Intel oneAPI AI Analytics toolkit compatible with other popular AI and machine learning frameworks?

Yes, the toolkit is designed for interoperability with popular AI and machine learning frameworks such as TensorFlow, PyTorch, and scikit-learn. Developers can seamlessly integrate these frameworks with the toolkit to harness Intel hardware optimizations and performance enhancements.
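As one illustration of this kind of integration with scikit-learn, the Intel Extension for Scikit-learn (installed from PyPI as `scikit-learn-intelex` and imported as `sklearnex`) provides a `patch_sklearn()` function that swaps supported scikit-learn estimators for Intel-optimized versions. The sketch below assumes that package may or may not be installed and falls back to stock scikit-learn when it is absent; the `enable_intel_sklearn` helper and the toy data are hypothetical. Which estimators are actually accelerated depends on the installed version.

```python
import importlib.util


def enable_intel_sklearn() -> bool:
    """Try to patch scikit-learn with Intel-optimized estimators.

    Returns True if the patch was applied, False if sklearnex is not installed.
    Call this BEFORE importing the scikit-learn estimators you want accelerated.
    """
    if importlib.util.find_spec("sklearnex") is None:
        return False  # fall back to stock scikit-learn
    from sklearnex import patch_sklearn

    patch_sklearn()  # replaces supported estimators with optimized versions
    return True


if __name__ == "__main__":
    accelerated = enable_intel_sklearn()
    print("Intel patch applied:", accelerated)
    if importlib.util.find_spec("sklearn") is not None:
        # Import estimators only after patching so the patched classes are used.
        from sklearn.cluster import KMeans

        data = [[0.0, 0.0], [0.1, 0.2], [5.0, 5.1], [5.2, 4.9]]  # toy data
        model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(data)
        print("cluster labels:", list(model.labels_))
```

The rest of the scikit-learn code stays unchanged, which is the main appeal of the patching approach: the same script runs with or without the extension installed.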

7. What resources are available for developers interested in learning more about the Intel oneAPI AI Analytics toolkit?

Intel provides extensive resources, including documentation, tutorials, and code samples, on its official website to help developers get started with the Intel oneAPI AI Analytics toolkit. There are also forums, community support channels, and training programs for developers who need additional assistance or guidance in using the toolkit effectively.