As systems meant to store and process large volumes and many types of data, Cloudera clusters must meet ever-changing security requirements set by government agencies, companies, industries, and the public. Cloudera clusters consist of Hadoop core and ecosystem components, and it is essential to protect these against various risks to ensure the confidentiality, integrity, and availability of the cluster's services and data.

As a component of a GDPR compliance framework, Cloudera delivers a common platform that helps with the security and governance of private consumer information, with the ability to detect breaches, enforce policies, trace data lineage, and perform audits.

Security Requirements

Meeting the goals of a data management system, including privacy, integrity, and availability, requires securing the system at multiple levels. These levels can be categorized by both broad operational goals and technical concepts:

Perimeter: Access to the cluster must be protected against a variety of threats originating from internal and external networks and from various actors. Network isolation can be provided, for example, by properly configuring firewalls, routers, and subnets, and by using public and private IP addresses. Before users, processes, and applications are granted access to the cluster, authentication mechanisms ensure that they identify themselves to the cluster and prove they are who they claim to be.
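The authentication step above, proving an identity before any access is granted, can be illustrated with a minimal Python sketch. It uses an HMAC challenge-response over a shared secret, a deliberately simplified stand-in that is not Kerberos and not how Cloudera actually authenticates. The `KEYS` registry, the principal names, and the functions `issue_challenge`, `respond`, and `verify` are all hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical registry of principals and their shared secrets.
# This is a toy stand-in for illustration only; it is NOT Kerberos.
KEYS = {
    "alice": b"alice-secret-key",
    "etl-service": b"etl-service-key",
}


def issue_challenge() -> bytes:
    """Server side: generate a fresh random nonce for each login attempt."""
    return secrets.token_bytes(16)


def respond(secret: bytes, challenge: bytes) -> str:
    """Client side: prove knowledge of the secret without sending it."""
    return hmac.new(secret, challenge, hashlib.sha256).hexdigest()


def verify(principal: str, challenge: bytes, response: str) -> bool:
    """Server side: recompute the expected response and compare in constant time."""
    secret = KEYS.get(principal)
    if secret is None:
        return False  # unknown principals are rejected outright
    expected = respond(secret, challenge)
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    challenge = issue_challenge()
    print(verify("alice", challenge, respond(KEYS["alice"], challenge)))    # True
    print(verify("alice", challenge, respond(b"wrong-key", challenge)))     # False
    print(verify("mallory", challenge, respond(KEYS["alice"], challenge)))  # False
```

Because the nonce changes on every attempt, a captured response cannot simply be replayed later; real protocols such as Kerberos add tickets, expiry, and a trusted third party on top of this basic idea.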

Data: Data in the cluster must be protected from unauthorized access, and communications between cluster nodes must be protected as well. If malicious actors intercept network packets or physically remove hard disk drives from the system, encryption renders the contents unusable.

Access: Access to any specific service or item of data within the cluster must be explicitly granted. Once users have authenticated to the cluster, authorization mechanisms restrict their access to only the data and processes they have been explicitly permitted to use.
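The "explicitly granted" rule above amounts to a default-deny check that runs only after authentication. The following minimal Python sketch shows that logic with group-based grants. The `PolicyStore` class, the `authorize` function, and the resource name `hive:sales.orders` are hypothetical and do not reflect Ranger's or HDFS's actual policy model or API.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyStore:
    """Hypothetical in-memory policy store: group membership plus explicit grants."""
    members: dict[str, set[str]] = field(default_factory=dict)  # group -> users
    grants: dict[tuple[str, str], set[str]] = field(default_factory=dict)  # (group, resource) -> actions

    def add_member(self, group: str, user: str) -> None:
        self.members.setdefault(group, set()).add(user)

    def grant(self, group: str, resource: str, action: str) -> None:
        self.grants.setdefault((group, resource), set()).add(action)

    def is_allowed(self, user: str, resource: str, action: str) -> bool:
        # Default deny: allow only if a group the user belongs to
        # was explicitly granted this action on this resource.
        return any(
            user in users and action in self.grants.get((group, resource), set())
            for group, users in self.members.items()
        )


def authorize(store: PolicyStore, authenticated: set[str],
              user: str, resource: str, action: str) -> bool:
    """Authorization is checked only for users who have already authenticated."""
    return user in authenticated and store.is_allowed(user, resource, action)


if __name__ == "__main__":
    store = PolicyStore()
    store.add_member("analysts", "alice")
    store.grant("analysts", "hive:sales.orders", "SELECT")
    authenticated = {"alice", "bob"}
    print(authorize(store, authenticated, "alice", "hive:sales.orders", "SELECT"))  # True
    print(authorize(store, authenticated, "alice", "hive:sales.orders", "DROP"))    # False
    print(authorize(store, authenticated, "bob", "hive:sales.orders", "SELECT"))    # False
```

Granting to groups rather than to individual users keeps the policy set small: adding a new analyst means changing group membership, not rewriting grants.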

Visibility: Visibility refers to the ability to see the history of the data in the cluster and to comply with data governance regulations. Auditing mechanisms log all activities on the data and its lineage (source, changes, and so on).
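To make the idea of logging activities together with lineage concrete, here is a minimal Python sketch of an append-only audit log whose events record their upstream sources, so lineage can be traced by walking those links. The `AuditEvent` and `AuditLog` classes and the dataset names are hypothetical; this is not the record format used by Apache Ranger or any Cloudera component.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    # Hypothetical audit record: who did what to which object, and what it was derived from.
    user: str
    action: str
    resource: str
    sources: tuple[str, ...] = ()  # upstream objects this resource was derived from
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AuditLog:
    """Append-only in-memory log; a real deployment would ship events to durable storage."""

    def __init__(self) -> None:
        self._events: list[AuditEvent] = []

    def record(self, event: AuditEvent) -> None:
        self._events.append(event)

    def history(self, resource: str) -> list[AuditEvent]:
        """All recorded activity on a single resource, in order."""
        return [e for e in self._events if e.resource == resource]

    def lineage(self, resource: str) -> set[str]:
        """Follow 'sources' links back to every upstream object of `resource`."""
        seen: set[str] = set()
        stack = [resource]
        while stack:
            current = stack.pop()
            for event in self.history(current):
                for src in event.sources:
                    if src not in seen:
                        seen.add(src)
                        stack.append(src)
        return seen

    def to_json_lines(self) -> str:
        """Serialize events one JSON object per line, a common log-shipping format."""
        return "\n".join(json.dumps(asdict(e)) for e in self._events)


if __name__ == "__main__":
    log = AuditLog()
    log.record(AuditEvent("etl-service", "WRITE", "/data/raw/orders.csv"))
    log.record(AuditEvent("etl-service", "CREATE", "sales.orders", sources=("/data/raw/orders.csv",)))
    log.record(AuditEvent("alice", "CREATE", "sales.daily_totals", sources=("sales.orders",)))
    print(sorted(log.lineage("sales.daily_totals")))  # ['/data/raw/orders.csv', 'sales.orders']
```

The `seen` set makes the lineage walk terminate even if the recorded sources happen to form a cycle.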

You can secure the cluster to meet specific business requirements by combining the security capabilities built into the Hadoop ecosystem with external security infrastructure, applying different security techniques at different levels.

Levels of Security

The diagram below depicts the levels of security that you can configure for a Cloudera cluster, ranging from non-secure to compliance-ready. The security you configure for the cluster should increase as the complexity and volume of data on the cluster grow.

Security Architecture for Hadoop

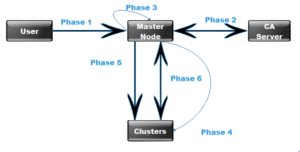

The diagram below shows some of the many components at work in a production Cloudera enterprise cluster. It highlights the importance of securing clusters that ingest data from internal and external sources, which may span multiple data centers. Authentication and access controls must be applied across these many inter- and intra-cluster connections, and to all users who want to query, run operations on, or even browse the data in the cluster.

External data streams ingested through Flume and Kafka can be authenticated using those components' built-in mechanisms. Data scientists and BI analysts can use interfaces such as Hue to work with data in Impala and Hive, for example, and to develop and submit jobs. All of these exchanges can be secured with Kerberos authentication.

Data at rest can be encrypted using transparent HDFS encryption backed by an enterprise-grade Key Trustee Server.

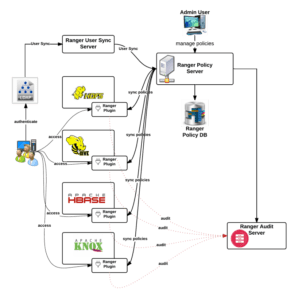

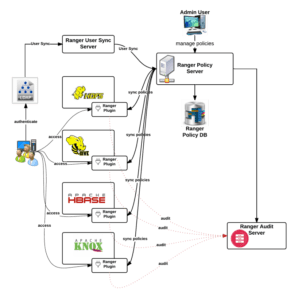

You can use both Ranger (for services like Hive, Impala, or Search) and HDFS Access Controls to enforce authorization policies.

Apache Ranger also provides auditing capabilities.

0. Non-secure

No security is configured. Non-secure clusters should never be used in production because they are vulnerable to attacks and exploits. Recommended only for proof-of-concept, demo, and throwaway platforms.

1. Minimal Security

Authentication, authorization, and auditing are all configured. Users and services can access the cluster only after proving their identities, so authentication is configured first. Authorization mechanisms then assign privileges to users and groups, and auditing procedures keep track of who accesses the cluster and how. Recommended for development platforms.

2. Data Governance and Security

Sensitive data is encrypted, and key management systems handle the encryption keys. Auditing is configured for data in the metastores, and the system's metadata is reviewed and updated regularly. The cluster should also be configured so that the lineage of any data object can be traced, which supports proper data governance. Recommended for staging and production platforms.

3. Ready to Comply

All data at rest and in transit in the secure CDP cluster is encrypted, and the access control solution is fault-tolerant. Auditing procedures comply with industry and regulatory standards (for example, PCI, HIPAA, and NIST) and extend beyond CDP to the other systems that connect to it. Cluster administrators are thoroughly trained, security procedures have been approved by an expert, and the cluster can withstand technical scrutiny. Recommended for all production platforms.
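The security levels described above are cumulative: each adds controls on top of the previous one. A minimal Python sketch can express that ladder as a check that returns the highest level whose controls, and all lower levels' controls, are in place. The enum names and control labels are hypothetical shorthand for the descriptions above, not Cloudera configuration keys or an official assessment tool.

```python
from enum import IntEnum


class Level(IntEnum):
    # Hypothetical labels for the four levels described above.
    NON_SECURE = 0
    MINIMAL = 1
    DATA_GOVERNANCE = 2
    COMPLIANCE_READY = 3


# Controls each level adds on top of the previous one (hypothetical labels).
REQUIREMENTS = {
    Level.MINIMAL: {"authentication", "authorization", "auditing"},
    Level.DATA_GOVERNANCE: {"encryption_sensitive_data", "key_management",
                            "metastore_auditing", "lineage_tracing"},
    Level.COMPLIANCE_READY: {"encryption_at_rest", "encryption_in_transit",
                             "fault_tolerant_access_control", "compliant_auditing",
                             "trained_admins", "expert_approved_procedures"},
}


def assess(controls: set[str]) -> Level:
    """Return the highest level whose requirements, and all lower ones, are met."""
    achieved = Level.NON_SECURE
    for level in (Level.MINIMAL, Level.DATA_GOVERNANCE, Level.COMPLIANCE_READY):
        if REQUIREMENTS[level] <= controls:  # subset test: every required control present
            achieved = level
        else:
            break  # levels are cumulative, so a gap stops the climb
    return achieved


if __name__ == "__main__":
    dev_cluster = {"authentication", "authorization", "auditing"}
    print(assess(dev_cluster).name)  # MINIMAL
```

Stopping at the first unmet level reflects the cumulative design: a cluster that encrypts data but has no authentication is still non-secure.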