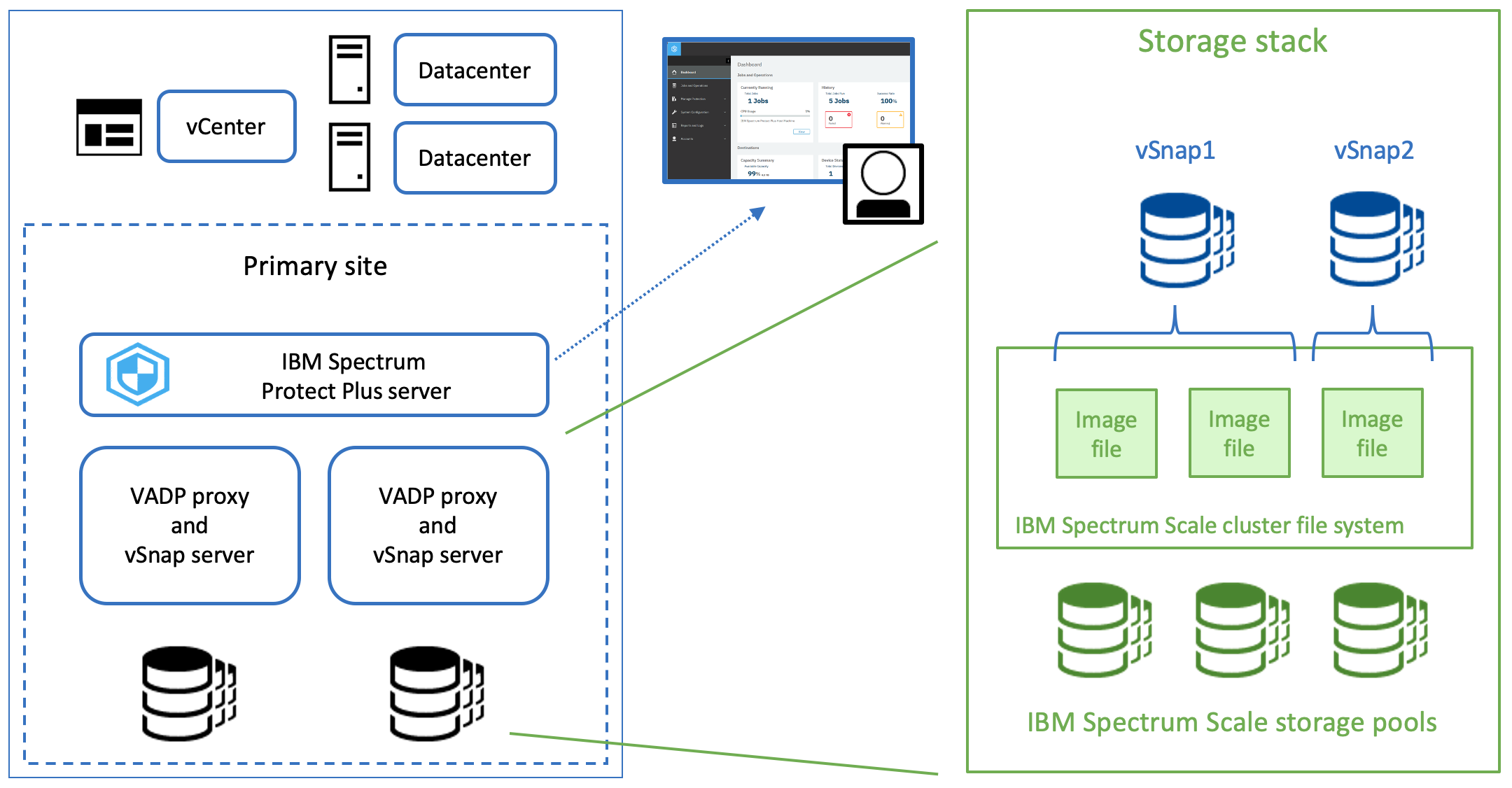

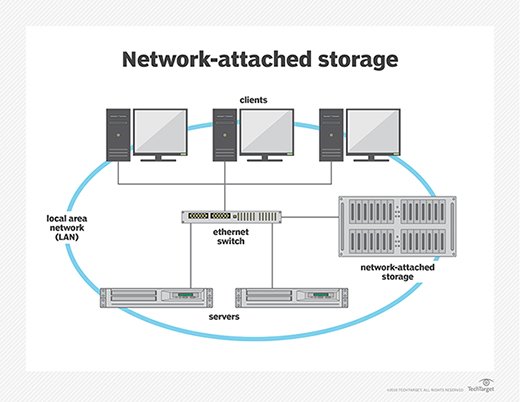

GPFS is a cluster file system that allows multiple nodes to access a single file system or set of file systems simultaneously. The nodes can be SAN-attached, network-attached, a combination of the two, or in a shared-nothing cluster configuration. This enables high-performance access to a common data set, which can underpin a scale-out solution or provide a high-availability platform.

Beyond common data access, GPFS has many features, such as data replication, policy-based storage management, and multi-site operations. A GPFS cluster can be made up of AIX® nodes, Linux nodes, Windows server nodes, or a combination of the three. GPFS can run on virtualized instances in environments that use logical partitioning or other hypervisors to provide common data access.

Tuning any system requires a continuous review of all system settings, an understanding of how the system behaves under different conditions, and access to performance data collection tools. Tuning a GPFS cluster is more challenging because performance depends on both system internals and external elements, which calls for specific tuning techniques. A structured approach to analyzing information and drawing the correct conclusions for every change you make is critical.

As a result, you must plan the tests you intend to perform in advance (including the order in which they must run) and meticulously document the results (data collection). Various tools are available to document the system configuration, monitor performance counters, and distribute and verify changes. A thorough understanding of these tools, and of the mindset each step requires, will speed up the process, save the time otherwise spent on repeat tests, and produce more precise results.
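One lightweight way to plan tests in a fixed order and document their results is a small record type. The Python sketch below is a hypothetical example, not an IBM tool: the class names, the single throughput metric (MB/s), and the sample parameter values are assumptions chosen for illustration, not tuning recommendations.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class TuningTest:
    """One planned test: a single parameter change and its measured result."""
    order: int                           # position in the planned sequence
    parameter: str                       # illustrative attribute name
    value: str                           # value applied for this test
    result_mbps: Optional[float] = None  # throughput measured after the change


@dataclass
class TuningPlan:
    baseline_mbps: float                 # throughput measured before any change
    tests: List[TuningTest] = field(default_factory=list)

    def next_test(self) -> Optional[TuningTest]:
        """Return the lowest-ordered test that has not been run yet."""
        pending = [t for t in self.tests if t.result_mbps is None]
        return min(pending, key=lambda t: t.order) if pending else None

    def record(self, test: TuningTest, mbps: float) -> None:
        """Store the measured result so the run is documented."""
        test.result_mbps = mbps

    def report(self) -> List[Tuple[int, str, str, float]]:
        """Percentage change versus baseline for each completed test, in order."""
        done = sorted((t for t in self.tests if t.result_mbps is not None),
                      key=lambda t: t.order)
        return [(t.order, t.parameter, t.value,
                 round(100.0 * (t.result_mbps - self.baseline_mbps) / self.baseline_mbps, 1))
                for t in done]


if __name__ == "__main__":
    plan = TuningPlan(baseline_mbps=1000.0, tests=[
        TuningTest(2, "maxMBpS", "6000"),
        TuningTest(1, "pagepool", "8G"),
    ])
    first = plan.next_test()      # the order-1 test runs first
    plan.record(first, 1100.0)
    print(plan.report())          # [(1, 'pagepool', '8G', 10.0)]
```

Keeping one parameter per test makes each percentage change attributable to a single setting.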

After you have configured the GPFS cluster, you can modify its configuration using the mmchcluster and mmchconfig commands.
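The mmchconfig command takes attribute=value pairs, optionally followed by options such as -i (apply the change immediately and make it permanent) and -N (limit the change to specific nodes). The sketch below only builds the argument list, so it can be reviewed or logged before anything runs. The helper name and the node names are hypothetical, and not every attribute accepts -i, so check the mmchconfig documentation for your release.

```python
from typing import Dict, List, Optional


def mmchconfig_argv(attributes: Dict[str, str],
                    nodes: Optional[List[str]] = None,
                    immediate: bool = False) -> List[str]:
    """Build (but do not run) an mmchconfig command line.

    attributes: attribute/value pairs, e.g. {"pagepool": "8G"}; passed through unchanged.
    nodes: optional node names for -N; without it the change is cluster-wide.
    immediate: add -i so the change takes effect now and persists.
    """
    if not attributes:
        raise ValueError("at least one attribute is required")
    argv = ["mmchconfig", ",".join(f"{k}={v}" for k, v in attributes.items())]
    if immediate:
        argv.append("-i")
    if nodes:
        argv += ["-N", ",".join(nodes)]
    return argv


if __name__ == "__main__":
    cmd = mmchconfig_argv({"pagepool": "8G"}, nodes=["node1", "node2"], immediate=True)
    print(" ".join(cmd))  # mmchconfig pagepool=8G -i -N node1,node2
```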

Use the mmchcluster command to perform the following tasks:

- Change the cluster name.

- Change the remote shell and remote file copy programs that the cluster nodes use. These programs must follow the syntax of the ssh and scp commands but may use a different authentication mechanism.

- Enable or disable the cluster configuration repository (CCR). For more information, see the Cluster configuration data files topic in the IBM Spectrum Scale: Concepts, Planning, and Installation Guide.

You can also perform the following tasks when the cluster uses the traditional server-based (non-CCR) configuration repository:

- Change the primary or secondary GPFS cluster configuration server node. The primary or secondary server can be moved to another node in the GPFS cluster; that node must be available for the command to succeed.

  Attention: If one or both of the old server nodes are down while you change to a new primary or secondary GPFS cluster configuration server, you must run the mmchcluster -p LATEST command as soon as the old servers are back online. Failing to do so may disrupt GPFS operations.

- Synchronize the primary GPFS cluster configuration server node. If an mmchcluster command fails, you are prompted to rerun it with the -p LATEST option to synchronize all nodes in the GPFS cluster. Synchronization directs all nodes in the GPFS cluster to use the most recently specified primary GPFS cluster configuration server.
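The mmchcluster tasks map onto its options roughly as follows: -C for the cluster name, -r and -R for the remote shell and copy programs, --ccr-enable and --ccr-disable for the repository, -p and -s for the server nodes, and -p LATEST for synchronization. The sketch below builds argument lists only and executes nothing. The flag spellings reflect the mmchcluster documentation as best understood here, so confirm them against the man page for your release; the function and node names are hypothetical.

```python
from typing import List, Optional


def rename_cluster(name: str) -> List[str]:
    """Change the cluster name (-C)."""
    return ["mmchcluster", "-C", name]


def set_remote_programs(shell: Optional[str] = None,
                        copy: Optional[str] = None) -> List[str]:
    """Change the remote shell (-r) and/or remote file copy (-R) program."""
    if not shell and not copy:
        raise ValueError("specify a shell program, a copy program, or both")
    argv = ["mmchcluster"]
    if shell:
        argv += ["-r", shell]
    if copy:
        argv += ["-R", copy]
    return argv


def set_ccr(enable: bool, primary: Optional[str] = None,
            secondary: Optional[str] = None) -> List[str]:
    """Enable CCR, or disable it; disabling needs a primary configuration server."""
    if enable:
        return ["mmchcluster", "--ccr-enable"]
    if not primary:
        raise ValueError("disabling CCR requires a primary configuration server")
    argv = ["mmchcluster", "--ccr-disable", "-p", primary]
    if secondary:
        argv += ["-s", secondary]
    return argv


def move_servers(primary: Optional[str] = None,
                 secondary: Optional[str] = None) -> List[str]:
    """Non-CCR clusters: move the primary (-p) and/or secondary (-s) server."""
    if not primary and not secondary:
        raise ValueError("specify a new primary, a new secondary, or both")
    argv = ["mmchcluster"]
    if primary:
        argv += ["-p", primary]
    if secondary:
        argv += ["-s", secondary]
    return argv


def resync_servers() -> List[str]:
    """Non-CCR clusters: point every node at the most recently specified primary."""
    return ["mmchcluster", "-p", "LATEST"]


if __name__ == "__main__":
    print(" ".join(move_servers(primary="node3")))  # mmchcluster -p node3
    # Once any old server that was down is back online:
    print(" ".join(resync_servers()))               # mmchcluster -p LATEST
```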

Configuring the GPFS environment

The performance of GPFS depends on the correct use of its tuning parameters and the tuning of the infrastructure on which it is based. It is critical to understand that the following guidelines are provided “as is,” and there is no guarantee that following them will result in improved performance in your environment.

Maintaining and tuning a GPFS environment involves choosing values for 175 tuning parameters based on the workload that real-world applications generate, along with overall design considerations and knowledge of the infrastructure, including hardware, storage, networking, and the operating system. It is therefore critical to assess the impact of each change as thoroughly as possible under similar or reproducible load conditions, using the production infrastructure.

Because it is difficult to reproduce the behavior of client applications and appliances with load-generation or benchmark tools, experts generally recommend assessing performance changes in response to changing control variables in the real customer environment, under conditions that closely resemble the actual production state. Implementing change management and a rollback plan is also critical so that the cluster can be restored to its previous state after each test.
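A rollback plan can be generated at the same time as the change itself. The hypothetical helper below takes the values recorded before a test (for example, copied from mmlsconfig output) and the values to try, then returns one mmchconfig command that applies the change and one that reverts it. It assumes that an attribute with no recorded value was at its default and that mmchconfig accepts DEFAULT to restore a default; verify both assumptions for your release. The example values are placeholders, not recommendations.

```python
from typing import Dict, List, Tuple


def change_with_rollback(current: Dict[str, str],
                         proposed: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (apply_argv, rollback_argv) for a set of mmchconfig changes.

    current:  values recorded before the test; a missing attribute is
              assumed to be at its default.
    proposed: values to apply for this test.
    Attributes whose proposed value equals the recorded one are skipped.
    """
    changed = {k: v for k, v in proposed.items() if current.get(k) != v}
    if not changed:
        return [], []
    apply_argv = ["mmchconfig", ",".join(f"{k}={v}" for k, v in changed.items())]
    # Restore each recorded value; fall back to DEFAULT (assumed keyword) if none was recorded.
    rollback_argv = ["mmchconfig",
                     ",".join(f"{k}={current.get(k, 'DEFAULT')}" for k in changed)]
    return apply_argv, rollback_argv


if __name__ == "__main__":
    before = {"pagepool": "4G", "maxMBpS": "2048"}
    trial = {"pagepool": "8G", "maxMBpS": "2048", "workerThreads": "512"}
    apply_cmd, undo_cmd = change_with_rollback(before, trial)
    print(" ".join(apply_cmd))  # mmchconfig pagepool=8G,workerThreads=512
    print(" ".join(undo_cmd))   # mmchconfig pagepool=4G,workerThreads=DEFAULT
```

Storing both commands alongside the test record means the revert step is decided before the change, not improvised after a bad result.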

FAQs

How do you configure GPFS for optimized performance?

To configure GPFS for optimized performance, you should consider factors such as file system layout, disk striping, and caching settings. Additionally, adjusting parameters related to I/O concurrency, read-ahead, and write-behind operations can further enhance performance.

What is file system layout, and how does it impact GPFS performance?

File system layout refers to the arrangement of data across physical storage devices in a GPFS cluster. Properly distributing data across disks and nodes helps balance I/O load, reduce contention, and maximize throughput, thereby improving overall performance.

What role does disk striping play in GPFS performance optimization?

Disk striping distributes data across multiple disks so that reads and writes can proceed in parallel. GPFS stripes file blocks across the disks of a file system, which makes efficient use of the available storage bandwidth and improves I/O performance, especially for large files and high-throughput workloads.
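The effect of striping on load balance can be seen with a simplified round-robin model. This is a toy, not the GPFS block allocator: it ignores storage pools, failure groups, and replication, and the disk names and sizes are made up.

```python
from collections import Counter
from typing import Dict, List


def stripe_blocks(file_size: int, block_size: int, disks: List[str],
                  start: int = 0) -> Dict[int, str]:
    """Toy round-robin placement: block i goes to disks[(start + i) % len(disks)]."""
    if block_size <= 0 or not disks:
        raise ValueError("block_size must be positive and disks must be non-empty")
    n_blocks = -(-file_size // block_size)  # ceiling division: a partial block still uses a block
    return {i: disks[(start + i) % len(disks)] for i in range(n_blocks)}


def blocks_per_disk(placement: Dict[int, str]) -> Counter:
    """How many of the file's blocks landed on each disk."""
    return Counter(placement.values())


if __name__ == "__main__":
    MiB = 1024 * 1024
    layout = stripe_blocks(10 * MiB, 1 * MiB, ["disk1", "disk2", "disk3", "disk4"])
    # disk1 and disk2 receive 3 blocks each, disk3 and disk4 receive 2 each
    print(blocks_per_disk(layout))
```

Because consecutive blocks sit on different disks, a large sequential read can keep all four disks busy at once instead of queuing on one.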

How can caching settings be adjusted to optimize GPFS performance?

Caching settings in GPFS control how data is cached and prefetched. The main lever is the size of the page pool (the pagepool attribute), together with related attributes such as maxFilesToCache and maxStatCache and the prefetching parameters. Sizing these to fit the workload reduces the number of disk I/Os, which hides disk latency and improves data access speed.
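A toy cache simulation shows why cache size should be judged against the working set. The sketch below uses a plain LRU policy, which is an assumption for illustration and not how the GPFS page pool manages its contents. With a cyclically accessed working set of 8 blocks, a 4-block cache gets no hits at all, while an 8-block cache serves every access after the first pass.

```python
from collections import OrderedDict
from typing import Iterable


def lru_hit_ratio(accesses: Iterable[int], capacity: int) -> float:
    """Fraction of block accesses served from a toy LRU cache holding `capacity` blocks."""
    cache: "OrderedDict[int, None]" = OrderedDict()
    hits = total = 0
    for block in accesses:
        total += 1
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # mark as most recently used
        else:
            cache[block] = None
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / total if total else 0.0


if __name__ == "__main__":
    workload = list(range(8)) * 5          # 8-block working set, read 5 times
    for size in (4, 8):
        print(size, lru_hit_ratio(workload, size))  # 4 -> 0.0, 8 -> 0.8
```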

What considerations should be taken into account for I/O concurrency in GPFS?

I/O concurrency settings determine the degree of parallelism for read and write operations in GPFS. Configuring optimal concurrency levels based on workload characteristics and hardware capabilities helps maximize system throughput and minimize I/O bottlenecks.
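The throughput side of concurrency can be illustrated from the application's point of view. The Python sketch below reads a file in chunks with a configurable number of concurrent workers. It does not change the GPFS daemon's own thread settings (such as the workerThreads attribute set with mmchconfig), and the chunk size and worker count are assumptions you would vary during a test.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def parallel_read(path: str, chunk_size: int, max_workers: int) -> bytes:
    """Read a file in fixed-size chunks using up to `max_workers` concurrent reads."""
    if chunk_size <= 0 or max_workers <= 0:
        raise ValueError("chunk_size and max_workers must be positive")
    size = os.path.getsize(path)
    offsets = list(range(0, size, chunk_size))

    def read_chunk(offset: int) -> bytes:
        # Each call opens its own handle so concurrent reads never share a file position.
        with open(path, "rb") as f:
            f.seek(offset)
            return f.read(chunk_size)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in offset order even though the reads overlap in time.
        return b"".join(pool.map(read_chunk, offsets))
```

Timing this function with different max_workers values on the same file is one way to see where added parallelism stops paying off for a given disk and network setup.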

How does adjusting read-ahead and write-behind operations affect GPFS performance?

Read-ahead and write-behind operations in GPFS prefetch data into cache ahead of time and delay disk writes, respectively. Fine-tuning these settings can improve data access latency and throughput by minimizing disk I/O wait times and reducing contention for storage resources.
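The two mechanisms can be compared with a toy model. In the sketch below, read-ahead triggers only on sequential access and prefetches a fixed number of blocks, and write-behind buffers a fixed number of blocks before one batched flush. Both rules are simplifying assumptions, not the GPFS prefetching or write-behind algorithm.

```python
from typing import Iterable, Optional, Set


def demand_misses(accesses: Iterable[int], prefetch_depth: int) -> int:
    """Count reads that must wait for disk in a toy read-ahead model.

    When a read is sequential (previous block + 1), the next `prefetch_depth`
    blocks are brought into the cache before they are requested.
    """
    cached: Set[int] = set()
    last: Optional[int] = None
    misses = 0
    for block in accesses:
        if block not in cached:
            misses += 1                  # the caller waits for this disk read
            cached.add(block)
        if last is not None and block == last + 1:
            cached.update(range(block + 1, block + 1 + prefetch_depth))
        last = block
    return misses


def write_behind_flushes(writes: Iterable[int], buffer_blocks: int) -> int:
    """Count disk write operations when writes are buffered and flushed in batches."""
    if buffer_blocks <= 0:
        raise ValueError("buffer_blocks must be positive")
    buffer = []
    flushes = 0
    for block in writes:
        buffer.append(block)             # the application continues without waiting
        if len(buffer) == buffer_blocks:
            flushes += 1                 # one batched write to disk
            buffer.clear()
    if buffer:
        flushes += 1                     # final flush of a partial buffer
    return flushes


if __name__ == "__main__":
    print(demand_misses(range(8), prefetch_depth=2))  # 2
    print(demand_misses(range(8), prefetch_depth=0))  # 8
    print(write_behind_flushes(range(10), 4))         # 3
```

The random-access case is the limitation to keep in mind: with no sequential pattern, read-ahead never triggers and every read still waits for disk.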

Are there any tools or utilities available to assist in configuring GPFS for optimized performance?

IBM provides tools such as mmperfmon and mmhealth for monitoring and analyzing GPFS performance metrics. Additionally, the GPFS documentation offers guidelines and best practices for performance tuning, helping administrators configure GPFS clusters to achieve optimal performance levels.
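A basic health check can be scripted around mmhealth. The sketch below runs `mmhealth node show` or `mmhealth cluster show` and returns the text output. It assumes the GPFS command directory (typically /usr/lpp/mmfs/bin) is on PATH and returns None when mmhealth cannot be found; the function names are hypothetical.

```python
import shutil
import subprocess
from typing import List, Optional


def health_argv(scope: str) -> List[str]:
    """Build `mmhealth node show` or `mmhealth cluster show`."""
    if scope not in ("node", "cluster"):
        raise ValueError("scope must be 'node' or 'cluster'")
    return ["mmhealth", scope, "show"]


def run_health(scope: str) -> Optional[str]:
    """Run the health query and return its stdout, or None if mmhealth is not on PATH.

    check=True makes a non-zero exit status raise subprocess.CalledProcessError.
    """
    argv = health_argv(scope)
    if shutil.which(argv[0]) is None:
        return None
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    output = run_health("cluster")
    print(output if output is not None else "mmhealth not found on PATH")
```

Capturing this output before and after each tuning test gives a simple record that the cluster was healthy while the measurements were taken.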